Deep Learning Week 4 NPTEL Assignment Answers

Are you looking for NPTEL Deep Learning Week 4 Assignment 4 Answers? You’ve come to the right place! Access the most accurate answers at Progiez.

NPTEL Deep Learning Week 4 Assignment 4 Answers (Jan-Apr 2025)

Q1. A given cost function is of the form $J(\theta) = 62 - 6\theta + 2\theta^2$. What is the weight update rule for gradient descent optimization at step $t+1$? Consider $\alpha = 0.01$ to be the learning rate.

a) $\theta_{t+1} = \theta_t - 0.01(20\theta - 1)$

b) $\theta_{t+1} = \theta_t + 0.01(20\theta)$

c) $\theta_{t+1} = \theta_t - (20\theta - 1)$

d) $\theta_{t+1} = 0 - 0.01(6 - 1)$

Q2. Can you identify in which of the following graphs gradient descent will not work correctly?

a) First figure

b) Second figure

c) First and second figure

d) Fourth figure

Q3. From the following two figures, can you identify which one corresponds to batch gradient descent and which one to stochastic gradient descent?

a) Graph-A: Batch gradient descent, Graph-B: Stochastic gradient descent

b) Graph-B: Batch gradient descent, Graph-A: Stochastic gradient descent

c) Graph-A: Batch gradient descent, Graph-B: Not Stochastic gradient descent

d) Graph-A: Not batch gradient descent, Graph-B: Not Stochastic gradient descent

Q4. Suppose a cost function $J(\theta) = 0.256$ is as shown in the graph below. At which point will the magnitude of the weight update be larger? $\theta$ is plotted along the horizontal axis.

a) Red point (Point 1)

b) Green point (Point 2)

c) Yellow point (Point 3)

d) Red (Point 1) and yellow (Point 3) have the same magnitude of weight update

Q5. Which logic function can be performed using a 2-layered Neural Network?

a) AND

b) OR

c) XOR

d) All

Q6. Let X and Y be two features to discriminate between two classes. The values and class labels of the features are given. What is the minimum number of neuron-layers required to design the neural network classifier?

a) 1

b) 2

c) 4

d) 5

Q7. Which among the following options gives the range for a logistic function?

a) -1 to 1

b) -1 to 0

c) 0 to 1

d) 0 to infinity

Q8. The number of weights (including bias) to be learned by the neural network having 3 inputs and 2 classes and a hidden layer with 5 neurons is:

a) 12

b) 15

c) 20

d) 32

Q9. For an XNOR function as given in the figure below, the activation function of each node is given. Consider $X_1 = 1$ and $X_2 = 0$. What will be the output of the neural network?

a) 1.5

b) 2

c) 0

d) 1

Q10. Which activation function is more prone to the vanishing gradient problem?

a) ReLU

b) Tanh

c) Sigmoid

d) Threshold

These are NPTEL Deep Learning Week 4 Assignment 4 Answers

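Q1. A given cost function is of the form $J(\theta) = 2\theta^2 - 4\theta + 2$. What is the weight update rule for gradient descent optimization at step $t+1$? Consider $\alpha = 0.01$ to be the learning rate.

a. $\theta_{t+1} = \theta_t - 0.01(2\theta - 1)$

b. $\theta_{t+1} = \theta_t + 0.01(2\theta)$

c. $\theta_{t+1} = \theta_t - (2\theta - 1)$

d. $\theta_{t+1} = \theta_t - 0.04(\theta - 1)$

Answer: d. $\theta_{t+1} = \theta_t - 0.04(\theta - 1)$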
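
The chosen option can be checked numerically. The sketch below assumes the cost function reads $J(\theta) = 2\theta^2 - 4\theta + 2$, so that $J'(\theta) = 4\theta - 4$ and one step with $\alpha = 0.01$ gives $\theta - 0.01(4\theta - 4) = \theta - 0.04(\theta - 1)$. The function names and the starting value are illustrative and not part of the assignment.

```python
def grad_J(theta):
    """Derivative of the assumed cost J(theta) = 2*theta**2 - 4*theta + 2."""
    return 4.0 * theta - 4.0


def gd_step(theta, alpha=0.01):
    """One gradient descent step: theta - alpha * J'(theta)."""
    return theta - alpha * grad_J(theta)


def option_d_step(theta):
    """Update rule from option (d): theta - 0.04 * (theta - 1)."""
    return theta - 0.04 * (theta - 1.0)


# Arbitrary starting point, chosen only for illustration.
theta = 3.0
first_generic = gd_step(theta)
first_option_d = option_d_step(theta)

# Repeating the update moves theta toward the minimiser of the assumed cost.
for _ in range(500):
    theta = gd_step(theta)

print(first_generic, first_option_d, theta)
```

Both forms of the update agree on the first step, and repeated steps approach $\theta = 1$, where the assumed derivative is zero.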

Q2. Which of the following activation functions leads to sparse activation maps?

a. Sigmoid

b. Tanh

c. Linear

d. ReLU

Answer: d. ReLU
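
A small sketch of why ReLU is the one that produces sparse outputs: it maps every negative pre-activation to exactly zero, while sigmoid and tanh return nonzero values for the same inputs. The sample pre-activation values below are made up for illustration.

```python
import math


def relu(x):
    """ReLU: passes positive values through, clamps negatives to zero."""
    return max(0.0, x)


def sigmoid(x):
    """Logistic sigmoid, strictly between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def count_zeros(values):
    """Number of entries that are exactly zero."""
    return sum(1 for v in values if v == 0.0)


# Hypothetical pre-activations of one layer (three negative, two positive).
pre_activations = [-2.0, -0.5, -0.1, 0.7, 3.0]

relu_out = [relu(x) for x in pre_activations]
sigmoid_out = [sigmoid(x) for x in pre_activations]
tanh_out = [math.tanh(x) for x in pre_activations]

print("ReLU zeros:", count_zeros(relu_out))
print("Sigmoid zeros:", count_zeros(sigmoid_out))
print("Tanh zeros:", count_zeros(tanh_out))
```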

Q3. Let $y_i$ be the ground-truth label for the $i$-th training sample and $p_i$ the predicted label. For binary classification, which of the following is a feasible loss function for training a neural network? $C$ is the number of training samples.

a. $-\sum_{i=1}^{C} y_i \log p_i - \sum_{i=1}^{C} (1 - y_i) \log(1 - p_i)$

b. $-\sum_{i=1}^{C} y_i \log p_i - \sum_{i=1}^{C} y_i \log(1 - p_i)$

c. $\sum_{i=1}^{C} y_i \log(1 - p_i) - \sum_{i=1}^{C} (1 - y_i) \log(p_i)$

d. $\sum_{i=1}^{C} (1 - y_i) \log p_i - \sum_{i=1}^{C} y_i \log(1 - p_i)$

Answer: a. $-\sum_{i=1}^{C} y_i \log p_i - \sum_{i=1}^{C} (1 - y_i) \log(1 - p_i)$
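
The selected loss is the binary cross-entropy. A minimal sketch of it in plain Python follows. The clipping constant `eps` and the sample labels and predictions are assumptions added here to keep the logarithm finite and to give the function something to run on.

```python
import math


def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Sum over samples of -y*log(p) - (1-y)*log(1-p)."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        # Clip p away from 0 and 1 so that log() stays finite (assumption).
        p = min(max(p, eps), 1.0 - eps)
        total += -y * math.log(p) - (1 - y) * math.log(1.0 - p)
    return total


# Made-up labels and two sets of predictions for illustration.
labels = [1, 0, 1]
good_predictions = [0.9, 0.1, 0.8]
bad_predictions = [0.1, 0.9, 0.2]

loss_good = binary_cross_entropy(labels, good_predictions)
loss_bad = binary_cross_entropy(labels, bad_predictions)
print(loss_good, loss_bad)
```

Predictions close to the labels give a small loss and predictions far from them give a large one, which is the behaviour a usable training loss needs.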

Q4. Which logic function cannot be performed using a single-layered Neural Network?

a. AND

b. OR

c. XOR

d. All

Answer: c. XOR
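
XOR is not linearly separable, so no single threshold unit can compute it, whereas AND and OR each have a separating line. The sketch below illustrates this with a brute-force search over a small weight grid. The grid and the helper names are assumptions, and the search is an illustration rather than a proof.

```python
from itertools import product

# All four binary input pairs, with the target outputs of each logic function.
INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
TARGETS = {
    "AND": [0, 0, 0, 1],
    "OR": [0, 1, 1, 1],
    "XOR": [0, 1, 1, 0],
}


def threshold_unit(w1, w2, b, x1, x2):
    """Single neuron with a step activation: fires when w.x + b > 0."""
    return 1 if w1 * x1 + w2 * x2 + b > 0 else 0


def find_weights(targets):
    """Search a coarse grid for (w1, w2, b) reproducing the targets."""
    grid = [i * 0.5 for i in range(-4, 5)]  # -2.0, -1.5, ..., 2.0
    for w1, w2, b in product(grid, repeat=3):
        outputs = [threshold_unit(w1, w2, b, x1, x2) for x1, x2 in INPUTS]
        if outputs == targets:
            return (w1, w2, b)
    return None  # no setting on the grid works


results = {name: find_weights(t) for name, t in TARGETS.items()}
for name, weights in results.items():
    print(name, weights)
```

The search returns a weight setting for AND and for OR, and `None` for XOR.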

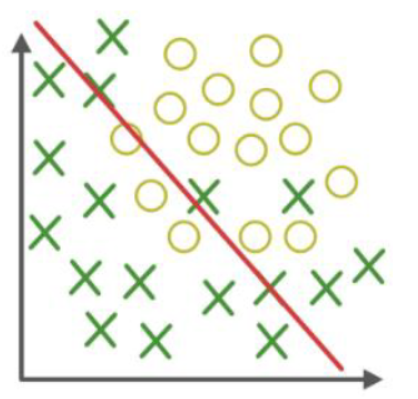

Q5. Which of the following options most closely relates to the following graph? Green crosses are samples of Class A, mustard rings are samples of Class B, and the red line is the separating line between the two classes.

a. High Bias

b. Zero Bias

c. Zero Bias and High Variance

d. Zero Bias and Zero Variance

Answer: a. High Bias

Q6. Which of the following statements is true?

a. L2 regularization leads to sparse activation maps

b. L1 regularization leads to sparse activation maps

c. Some of the weights are squashed to zero in L2 regularization

d. L2 regularization is also known as Lasso

Answer: b. L1 regularization leads to sparse activation maps

Q7. Which among the following options gives the range for a tanh function?

a. -1 to 1

b. -1 to 0

c. 0 to 1

d. 0 to infinity

Answer: a. -1 to 1

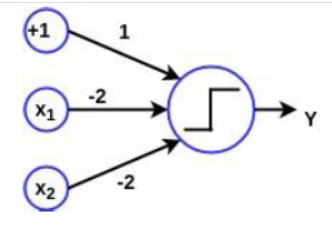

Q8. Consider the neural network shown in the figure with inputs $x_1$, $x_2$ and output $Y$. The inputs take the values $x_1, x_2 \in \{0, 1\}$. The logical operation performed by the network is

a. AND

b. OR

c. XOR

d. NOR

Answer: d. NOR

Q9. When is the gradient descent algorithm certain to find a global minimum?

a. For convex cost plot

b. For concave cost plot

c. For union of 2 convex cost plot

d. For union of 2 concave cost plot

Answer: a. For convex cost plot

Q10. Let $X = [-1, 0, 3, 5]$ be the input of the $i$-th layer of a neural network. We want to apply the softmax function to it. What should the output be?

a. [0.368, 1, 20.09, 148.41]

b. [0.002, 0.006, 0.118, 0.874]

c. [0.3, 0.05, 0.6, 0.05]

d. [0.04, 0, 0.06, 0.9]

Answer: b. [0.002, 0.006, 0.118, 0.874]
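
The answer can be reproduced by computing softmax directly: exponentiate each entry and divide by the sum of the exponentials. Option (a) lists only the unnormalised exponentials. The sketch below subtracts the maximum before exponentiating, a common numerical-stability step that does not change the result. The function name is illustrative.

```python
import math


def softmax(xs):
    """Softmax of a list of numbers: exp(x_i) / sum_j exp(x_j)."""
    m = max(xs)  # shift by the maximum for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([-1, 0, 3, 5])
rounded = [round(p, 3) for p in probs]
print(rounded)  # [0.002, 0.006, 0.118, 0.874]
```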

More Nptel Courses: https://progiez.com/nptel