Deep Learning Week 5 NPTEL Assignment Answers

Course Name: Deep Learning

NPTEL Deep Learning Week 5 Assignment Answers (Jan-Apr 2025)

- Suppose a fully-connected neural network has a single hidden layer with 30 nodes. The input is represented by a 3D feature vector, and we have a binary classification problem. Calculate the number of parameters of the network. Consider there are NO bias nodes in the network.

A. 100

B. 120

C. 140

D. 125
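
The count can be sketched in a few lines of Python. This is a minimal sketch, assuming a single output node for the binary classifier (the question does not state the output width) and no bias terms; `count_weights` is a hypothetical helper name.

```python
def count_weights(layer_sizes):
    """Count weights in a bias-free fully-connected network.

    layer_sizes lists the node count of each layer, input layer first.
    """
    return sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# 3 inputs -> 30 hidden nodes -> 1 output node (assumed)
print(count_weights([3, 30, 1]))  # 3*30 + 30*1 = 120
```

Under that assumption the result matches option B.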

- For a binary classification setting, if the probability of belonging to class = +1 is 0.22, what is the probability of belonging to class = -1?

a. 0

b. 0.22

c. 0.78

d. -0.22

- The input to the softmax activation function is [2, 4, 6]. What will be the output?

A. [0.11, 0.78, 0.11]

B. [0.016, 0.117, 0.867]

C. [0.045, 0.910, 0.045]

D. [0.21, 0.58, 0.21]
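
The softmax values can be checked numerically. The sketch below uses only the Python standard library; subtracting the maximum before exponentiating is a common stability trick and is an addition here, not something the question requires.

```python
import math


def softmax(logits):
    """Softmax with the max subtracted first for numerical stability."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([2, 4, 6])
print([round(p, 3) for p in probs])  # [0.016, 0.117, 0.867]
```

The rounded values agree with option B, and the three probabilities sum to 1.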

- A 3-input neuron has weights 1, 0.5, and 2. The transfer function is linear, with the constant of proportionality being equal to 2. The inputs are 2, 20, and 4 respectively. The output will be:

A. 40

B. 20

C. 50

D. 10
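
A short sketch of the computation, assuming "linear transfer function with constant of proportionality 2" means f(s) = 2s applied to the weighted sum s; `linear_neuron` is a hypothetical helper name.

```python
def linear_neuron(weights, inputs, k):
    """Weighted sum followed by a linear transfer function f(s) = k * s."""
    s = sum(w * x for w, x in zip(weights, inputs))
    return k * s


print(linear_neuron([1, 0.5, 2], [2, 20, 4], k=2))  # 2 * (2 + 10 + 8) = 40.0
```

Under that reading the output is 40, which is option A.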

- Which one of the following activation functions is NOT analytically differentiable for all real values of the given input?

A. Sigmoid

B. Tanh

C. ReLU

D. None of the above

- Which function does the following perceptron realize? X1 and X2 can take only binary values. h(x) is the activation function. h(x) = 1 if x > 0, else 0. Output = ?

A. NAND

B. NOR

C. AND

D. OR

- What are the sizes of the weight matrices between the hidden and output layers and between the input and hidden layers, respectively, in a simple MLP model with 10 neurons in the input layer, 100 neurons in the hidden layer, and 1 neuron in the output layer?

A. [10×1], [100×2]

B. [100×1], [10×1]

C. [100×10], [10×1]

D. [100×1], [10×100]
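
The shapes can be listed with a small sketch. It assumes each matrix is written as [fan-in × fan-out], which is the convention the options appear to use; the transposed convention is equally common in libraries. `weight_shapes` is a hypothetical helper name.

```python
def weight_shapes(layer_sizes):
    """Shape (fan_in, fan_out) of each weight matrix, input side first."""
    return [(n_in, n_out) for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]


input_hidden, hidden_output = weight_shapes([10, 100, 1])
print(hidden_output, input_hidden)  # (100, 1) (10, 100)
```

With that convention the pair corresponds to option D.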

- Consider a fully connected neural network with input, one hidden layer, and output layer with 40, 2, and 1 nodes respectively in each layer. What is the total number of learnable parameters (no biases)?

A. 2

B. 82

C. 50

D. 40

- You want to build a 10-class neural network classifier. Given a cat image, you want to classify which of the 10 cat breeds it belongs to. Which among the 4 options would be an appropriate loss function to use for this task?

A. Cross Entropy Loss

B. MSE Loss

C. SSNV Loss

D. None of the above
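
For intuition on why cross-entropy is the usual choice for multi-class classification, here is a minimal sketch of the loss for one example. The probability vectors are made-up illustrative values, and `cross_entropy` is a hypothetical helper name.

```python
import math


def cross_entropy(probs, true_class):
    """Cross-entropy for one example: -log of the probability of the true class."""
    return -math.log(probs[true_class])


uniform = [0.1] * 10             # illustrative: untrained guess over 10 breeds
confident = [0.91] + [0.01] * 9  # illustrative: most mass on class 0

print(cross_entropy(uniform, 0))    # equals log(10)
print(cross_entropy(confident, 0))  # smaller loss for a confident correct guess
```

The loss shrinks as the predicted probability of the correct breed grows, which is the behaviour wanted from a classification loss.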

- You’d like to train a fully-connected neural network with 5 hidden layers, each with 10 hidden units. The input is 20-dimensional and the output is a scalar. What is the total number of trainable parameters in your network? There is no bias.

A. (20 × 1) × 10 + (10 + 1) × 10 × 4 + (10 + 1) × 1

B. 20 × 10 + 10 × 10 × 4 + 10 × 1

C. 20 × 10 + 10 × 10 × 5 + 10 × 1

D. (20 + 1) × 10 + (10 + 1) × 10 × 5 + (10 + 1) × 1
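
As a rough check of the bias-free count, assuming the 5 hidden layers are connected in sequence (which gives 4 hidden-to-hidden weight matrices), the terms can be written out as:

$$
\underbrace{20 \times 10}_{\text{input} \to h_1}
+ \underbrace{4 \times (10 \times 10)}_{h_1 \to h_2 \to \dots \to h_5}
+ \underbrace{10 \times 1}_{h_5 \to \text{output}}
= 200 + 400 + 10 = 610
$$

This has the same form as option B.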

NPTEL Deep Learning Week 5 Assignment 5 Answers 2023

Q1. The activation function which is not analytically differentiable for all real values of the given input is

a. Sigmoid

b. Tanh

c. ReLU

d. Both a and b

Answer: c. ReLU
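
The kink at zero can be seen numerically. The sketch below compares one-sided finite-difference slopes of ReLU at x = 0; the step size `h` and the helper name `one_sided_slopes` are illustrative choices.

```python
def relu(x):
    return max(0.0, x)


def one_sided_slopes(f, x, h=1e-6):
    """Return (left, right) finite-difference slopes of f at x."""
    left = (f(x) - f(x - h)) / h
    right = (f(x + h) - f(x)) / h
    return left, right


print(one_sided_slopes(relu, 0.0))  # (0.0, 1.0)
```

The left and right slopes disagree at 0, so no single derivative exists there, whereas sigmoid and tanh are smooth everywhere.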

Q2. What is the main benefit of stacking multiple layers of neurons with non-linear activation functions over a single-layer perceptron?

a. Reduces complexity of the network

b. Reduces inference time during testing

c. Allows the creation of non-linear decision boundaries

d. All of the above

Answer: c. Allows the creation of non-linear decision boundaries

These are NPTEL Deep Learning Week 5 Assignment 5 Answers

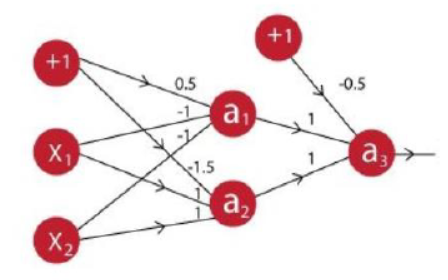

Q3. What will be the output from node a3 in the following neural network setup when the inputs are (x1, x2) = (1, 1)? The activation function used in each of the three nodes a1, a2, and a3 is zero-thresholding, i.e., f(x) = 1 if x > 0, else 0.

a. -1

b. 0

c. 1

d. 0.5

Answer: c. 1

Q4. Suppose a neural network has 3 input nodes, x, y, z. There are 2 neurons, Q and F, with Q = 4x + y and F = Q * z^2. What is the gradient of F with respect to x, y, and z? Assume (x, y, z) = (-2, 5, -4).

a. (64,16, 24)

b. (-24,-4,16)

c. (4,4,-13)

d. (13,13,24)

Answer: a. (64,16, 24)
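
The gradient follows from the chain rule and can be verified with a short sketch. It assumes the second neuron is F = Q * z² (the exponent is read from the flattened "z2" in the question); `grad_F` is a hypothetical helper name.

```python
def grad_F(x, y, z):
    """Gradient of F = Q * z**2 with Q = 4x + y, via the chain rule."""
    Q = 4 * x + y
    dF_dQ = z ** 2
    dF_dx = dF_dQ * 4  # dQ/dx = 4
    dF_dy = dF_dQ * 1  # dQ/dy = 1
    dF_dz = 2 * Q * z  # d(z^2)/dz = 2z, with Q held fixed
    return dF_dx, dF_dy, dF_dz


print(grad_F(-2, 5, -4))  # (64, 16, 24)
```

Here Q = 4(-2) + 5 = -3 and z² = 16, giving 4 × 16 = 64, 1 × 16 = 16, and 2 × (-3) × (-4) = 24.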

Q5. Which of the following properties, if present in an activation function CANNOT be used in a neural network?

a. The function is periodic

b. The function is monotonic

c. The function is unbounded

d. Both a and b

Answer: a. The function is periodic

Q6. For a binary classification setting, if the probability of belonging to class = +1 is 0.67, what is the probability of belonging to class = -1?

a. 0

b. 0.33

c. 0.67 * 0.33

d. 1 - (0.67 * 0.67)

Answer: b. 0.33

Q7. Suppose a fully-connected neural network has a single hidden layer with 10 nodes. The input is represented by a 5D feature vector and the number of classes is 3. Calculate the number of parameters of the network. Consider there are NO bias nodes in the network.

a. 80

b. 75

c. 78

d. 120

Answer: a. 80

Q8. For a 2-class classification problem, what is the minimum number of nodes required for the output layer of a multi-layered neural network?

a. 2

b. 1

c. 3

d. None of the above

Answer: b. 1

Q9. Suppose the input layer of a fully-connected neural network has 4 nodes. The value of a node in the first hidden layer before applying the sigmoid nonlinearity is v. Now, each of the input layer's nodes is scaled up by 8 times. What will be the value of that neuron with the updated input layer?

a. 8v

b. 4v

c. 32v

d. Remain same since scaling of input layers does not affect the hidden layers

Answer: a. 8v
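
Because the pre-activation is a weighted sum of the inputs, scaling every input by 8 scales it by 8 as well. The sketch below illustrates this with made-up weights and inputs, and assumes the node has no bias term (a bias would not be scaled).

```python
def pre_activation(weights, inputs):
    """Weighted sum feeding a hidden node, before the sigmoid (no bias term)."""
    return sum(w * x for w, x in zip(weights, inputs))


weights = [0.5, -1.0, 2.0, 0.25]  # illustrative values
inputs = [1.0, 2.0, 3.0, 4.0]     # illustrative values

v = pre_activation(weights, inputs)
v_scaled = pre_activation(weights, [8 * x for x in inputs])
print(v, v_scaled)  # 5.5 44.0
```

The scaled value is exactly 8 times the original, independent of how many input nodes there are.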

Q10. Which of the following are potential benefits of using ReLU activation over sigmoid activation?

a. ReLU helps in creating dense (most of the neurons are active) representations

b. ReLU helps in creating sparse (most of the neurons are inactive) representations

c. ReLU helps in mitigating the vanishing gradient effect

d. Both (b) and (c)

Answer: d. Both (b) and (c)
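
Both effects can be illustrated on a handful of made-up pre-activation values. This is a sketch for intuition only, not a measurement from a trained network.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def relu(x):
    return max(0.0, x)


pre_activations = [-3.0, -1.0, -0.5, 0.5, 2.0]  # illustrative values

relu_out = [relu(a) for a in pre_activations]
sigmoid_out = [sigmoid(a) for a in pre_activations]

# Sparsity: ReLU outputs exactly zero for every negative pre-activation
zeros = sum(1 for r in relu_out if r == 0.0)
print(zeros, "of", len(relu_out), "ReLU outputs are exactly zero")

# Gradients: sigmoid'(a) = s * (1 - s) never exceeds 0.25,
# while ReLU'(a) = 1 for every a > 0
sigmoid_grads = [s * (1.0 - s) for s in sigmoid_out]
print(max(sigmoid_grads) <= 0.25)
```

Multiplying many factors of at most 0.25 across layers is what shrinks sigmoid gradients, whereas active ReLU units pass a factor of 1.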

More Nptel Courses: https://progiez.com/nptel