Deep Learning Week 6 NPTEL Assignment Answers

Course Name: Deep Learning

NPTEL Deep Learning Week 6 Assignment 6 Answers (Jan-Apr 2025)

- Suppose a neural network has 3 input nodes a, b, c and 2 neurons X and Y, where X = a + b and Y = X * c. What is the gradient of Y with respect to a, b, and c? Assume (a, b, c) = (6, -1, -4).

a) (5, -4, -4)

b) (4, 4, -5)

c) (-4, -4, 5)

d) (3, 3, 4)
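The gradient follows from the chain rule. The multiply gate gives dY/dX = c and dY/dc = X = a + b. The add gate then copies dY/dX unchanged to both a and b. Below is a minimal hand-written backward pass; the function name `forward_backward` is illustrative and does not come from the course. With (a, b, c) = (6, -1, -4) it yields (-4, -4, 5), which matches option c.

```python
def forward_backward(a: float, b: float, c: float):
    """Forward pass X = a + b, Y = X * c, then backpropagate dY/d(a, b, c)."""
    # Forward pass
    x = a + b
    y = x * c
    # Backward pass, starting from dY/dY = 1
    dy = 1.0
    dx = dy * c   # multiply gate: dY/dX = c
    dc = dy * x   # multiply gate: dY/dc = X
    da = dx       # add gate: dX/da = 1, so the gradient passes through
    db = dx       # add gate: dX/db = 1
    return y, (da, db, dc)


y, grads = forward_backward(6.0, -1.0, -4.0)
print(y, grads)  # -20.0 (-4.0, -4.0, 5.0)
```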

- Let y = max(a, b) with a > b. What are the values of dy/da and dy/db?

a) 1, 0

b) 0, 1

c) 0, 0

d) 1, 1
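The max gate passes the upstream gradient only to the larger input. So when a > b, dy/da = 1 and dy/db = 0 (option a). Here is a small sketch of that routing rule, using a hypothetical helper `max_backward`:

```python
def max_backward(a: float, b: float, upstream: float = 1.0):
    """Return (dy/da, dy/db) for y = max(a, b), scaled by the upstream gradient."""
    # The max gate routes the whole upstream gradient to the larger input.
    # Ties (a == b) go to a here; max is not differentiable there, so this
    # is only a convention.
    if a >= b:
        return upstream, 0.0
    return 0.0, upstream


print(max_backward(5.0, 2.0))  # (1.0, 0.0)
```

The same rule, applied window by window, is what produces sparse gradients when backpropagating through max pooling.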

- PCA reduces the dimension by finding a few __________.

a) Hexagonal linear combination

b) Orthogonal linear combinations

c) Octagonal linear combination

d) Pentagonal linear combination

- Consider the four sample points below, $X_i \in \mathbb{R}^2$.

We want to represent the data in 1D using PCA. Compute the unit-length principal component directions of X, then choose the option that the PCA algorithm would return if you request just one principal component.

a) $[1/\sqrt{2}, 1/\sqrt{2}]^T$

b) $[1/\sqrt{2}, -1/\sqrt{2}]^T$

c) $[-1/\sqrt{2}, 1/\sqrt{2}]^T$

d) $[1/\sqrt{2}, 1/\sqrt{2}]^T$
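The sample points did not survive extraction. The sketch below therefore uses four *hypothetical* points, (1, 2), (2, 1), (3, 4) and (4, 3), purely to show the procedure:

1. Center the data.
2. Build the 2×2 covariance matrix.
3. Take the unit eigenvector with the largest eigenvalue.

It uses the closed-form eigen-decomposition of a symmetric 2×2 matrix instead of a library call. The function names are illustrative.

```python
import math


def covariance_2d(points):
    """Return (sxx, sxy, syy) of the centered 2D points, dividing by n."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points) / n
    syy = sum((p[1] - my) ** 2 for p in points) / n
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / n
    return sxx, sxy, syy


def first_principal_component(points):
    """Largest eigenvalue and its unit eigenvector of the 2x2 covariance matrix."""
    sxx, sxy, syy = covariance_2d(points)
    half_trace = (sxx + syy) / 2
    det = sxx * syy - sxy * sxy
    lam = half_trace + math.sqrt(half_trace ** 2 - det)  # larger root
    # (sxy, lam - sxx) solves (C - lam * I) v = 0 when sxy != 0
    if abs(sxy) > 1e-12:
        vx, vy = sxy, lam - sxx
    elif sxx >= syy:
        vx, vy = 1.0, 0.0  # covariance already diagonal
    else:
        vx, vy = 0.0, 1.0
    norm = math.hypot(vx, vy)
    return lam, (vx / norm, vy / norm)


points = [(1.0, 2.0), (2.0, 1.0), (3.0, 4.0), (4.0, 3.0)]  # hypothetical data
lam, direction = first_principal_component(points)
print(lam, direction)  # 2.0 and roughly (0.7071, 0.7071)
```

For these made-up points the covariance matrix is [[1.25, 0.75], [0.75, 1.25]]. Its eigenvalues are 2.0 and 0.5, so the first component is $[1/\sqrt{2}, 1/\sqrt{2}]^T$ (up to sign). Dividing by n − 1 instead of n scales the eigenvalues but leaves the directions unchanged.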

- Which of the following is FALSE about PCA and Autoencoders?

a) Both PCA and Autoencoders can be used for dimensionality reduction

b) PCA works well with non-linear data but Autoencoders are best suited for linear data

c) Output of both PCA and Autoencoders is lossy

d) None of the above

- What is true regarding the backpropagation rule?

a) It is a feedback neural network

b) The gradient of the final layer of weights is calculated first and the gradient of the first layer of weights is calculated last

c) Hidden layers are not important, only meant for supporting the input and output layer

d) None of the mentioned
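Option b describes the order in which backpropagation computes gradients. The final layer's gradient comes first because every earlier layer's gradient reuses it. The sketch below assumes a hypothetical two-layer linear network h = w1·x, y = w2·h with squared-error loss, and records the order in which the weight gradients are produced:

```python
def backprop_two_layers(x, t, w1, w2):
    """Backprop through h = w1 * x, y = w2 * h, L = 0.5 * (y - t) ** 2."""
    order = []
    # Forward pass (no activations, to keep the example small)
    h = w1 * x
    y = w2 * h
    loss = 0.5 * (y - t) ** 2
    # Backward pass, moving from the loss toward the input
    dy = y - t            # dL/dy
    dw2 = dy * h          # final layer's weight gradient is available first
    order.append("w2")
    dh = dy * w2          # reuse dL/dy to get the gradient flowing into layer 1
    dw1 = dh * x          # first layer's weight gradient comes last
    order.append("w1")
    return loss, {"w1": dw1, "w2": dw2}, order


loss, grads, order = backprop_two_layers(x=1.0, t=0.0, w1=2.0, w2=3.0)
print(loss, grads, order)  # 18.0 {'w1': 18.0, 'w2': 12.0} ['w2', 'w1']
```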

- Which of the following is true for PCA? Tick all the options that are correct.

a) Rotates the axes to lie along the principal components

b) Is calculated from the covariance matrix

c) Removes some information from the data

d) Eigenvectors describe the length of the principal components

- An autoencoder with a single hidden layer and no biases has 100 input neurons and 10 hidden neurons. How many parameters does this autoencoder have?

a) 1000

b) 2000

c) 2110

d) 1010
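With no biases, the encoder has 100 × 10 weights and the decoder has 10 × 100, giving 2000 (option b). The helper below is a hypothetical counting sketch that assumes untied encoder and decoder weights. With biases enabled it gives 2110, which is the count option c corresponds to.

```python
def autoencoder_param_count(n_in: int, n_hidden: int, bias: bool = False) -> int:
    """Parameters of a one-hidden-layer autoencoder with untied weights."""
    # Encoder maps n_in -> n_hidden; decoder maps n_hidden back to n_in.
    encoder = n_in * n_hidden + (n_hidden if bias else 0)
    decoder = n_hidden * n_in + (n_in if bias else 0)
    return encoder + decoder


print(autoencoder_param_count(100, 10))             # 2000
print(autoencoder_param_count(100, 10, bias=True))  # 2110
```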

- Which of the following pairs of vectors can form the first two principal components?

a) {2; 3; 1} and {3; 1; −9}

b) {2; 4; 1} and {−2; 1; −8}

c) {2; 3; 1} and {−3; 1; −9}

d) {2; 3; −1} and {3; 1; −9}
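Principal components are mutually orthogonal, so a valid pair must have a zero dot product. Here is a quick check of each option, using plain Python tuples and no library:

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(ui * vi for ui, vi in zip(u, v))


options = {
    "a": ((2, 3, 1), (3, 1, -9)),
    "b": ((2, 4, 1), (-2, 1, -8)),
    "c": ((2, 3, 1), (-3, 1, -9)),
    "d": ((2, 3, -1), (3, 1, -9)),
}

# A valid pair of principal components must be orthogonal (dot product 0).
for label, (u, v) in options.items():
    print(label, dot(u, v))
# a 0
# b -8
# c -12
# d 18
```

Only option a gives a dot product of 0 (6 + 3 − 9).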

- Let’s say vectors $a = \{2, 4\}$ and $b = \{n, 1\}$ form the first two principal components after applying PCA. Under such circumstances, which among the following can be a possible value of n?

a) 2

b) -2

c) 0

d) 1
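The same orthogonality condition applies here: a · b = 2n + 4 = 0, so n = -2 (option b). Checking the listed candidates directly:

```python
a = (2, 4)
candidates = [2, -2, 0, 1]  # options a) to d)

# b = (n, 1) must be orthogonal to a: a[0] * n + a[1] * 1 == 0
valid = [n for n in candidates if a[0] * n + a[1] * 1 == 0]
print(valid)  # [-2]

# Closed form: n = -a[1] / a[0]
print(-a[1] / a[0])  # -2.0
```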

NPTEL Deep Learning Week 6 Assignment 6 Answers 2023

Q1. Which of the following is used to correct a weight during backpropagation?

a. Positive gradient of weight

b. Gradient of error

c. Negative gradient of error w.r.t weight

d. Negative gradient of weight

Answer: c. Negative gradient of error w.r.t weight

Q2. What will happen when the learning rate is set to zero?

a. Weight update will be very slow

b. Weights will be zero

c. Weight update will tend to zero but not exactly zero

d. Weights will not be updated

Answer: d. Weights will not be updated
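Q1 and Q2 both follow from the gradient-descent update w ← w − η ∂E/∂w. The correction uses the negative gradient of the error with respect to the weight, and with η = 0 the weights stay exactly as they are. Here is a minimal sketch with made-up weights and gradients (the name `sgd_step` is illustrative):

```python
def sgd_step(weights, grads, lr):
    """One gradient-descent update: w <- w - lr * dE/dw for each weight."""
    # Each weight moves along the negative gradient of the error w.r.t. that weight
    return [w - lr * g for w, g in zip(weights, grads)]


w = [0.5, -1.2]
g = [0.3, -0.4]  # hypothetical dE/dw values

print(sgd_step(w, g, lr=0.1))  # weights move against the gradient
print(sgd_step(w, g, lr=0.0))  # [0.5, -1.2]: no update at all
```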

These are NPTEL Deep Learning Week 6 Assignment 6 Answers

Q3. During back-propagation through max pooling with stride, the gradients are:

a. Evenly distributed

b. Sparse gradients are generated with non-zero gradient at the max response location

c. Differentiated with respect to responses

d. None of the above

Answer: b. Sparse gradients are generated with non-zero gradient at the max response location
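In max pooling, only the position that produced the maximum influences the output. So in the backward pass, each pooled gradient is copied to that position and every other input position gets zero. The sketch below assumes a 2×2 window with stride 2 on a small hypothetical 4×4 input stored as nested lists:

```python
def maxpool_backward(x, upstream, size=2, stride=2):
    """Route each pooled output's gradient back to the argmax of its window."""
    rows, cols = len(x), len(x[0])
    grad = [[0.0] * cols for _ in range(rows)]
    out_r = 0
    for i in range(0, rows - size + 1, stride):
        out_c = 0
        for j in range(0, cols - size + 1, stride):
            # Locate the max response inside this window
            # (ties resolve to the later position by tuple ordering)
            window = [(x[i + di][j + dj], i + di, j + dj)
                      for di in range(size) for dj in range(size)]
            _, mi, mj = max(window)
            # Only the max location receives the upstream gradient;
            # += also handles overlapping windows when stride < size
            grad[mi][mj] += upstream[out_r][out_c]
            out_c += 1
        out_r += 1
    return grad


x = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 3, 2],
    [2, 6, 1, 1],
]
upstream = [[1.0, 2.0], [3.0, 4.0]]  # hypothetical gradients from the next layer
for row in maxpool_backward(x, upstream):
    print(row)
```

Only 4 of the 16 input positions receive a non-zero gradient, which is the sparse pattern described in option b.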

Q4. The gradient of the sigmoid function is maximum at x = ?

a. 0

b. Positive Infinity

c. Negative Infinity

d. 1

Answer: a. 0
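The sigmoid's derivative is σ'(x) = σ(x)(1 − σ(x)). It peaks at 0.25 when σ(x) = 0.5, i.e. at x = 0, and decays toward 0 in both tails. A short numerical check:

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    """Derivative of the sigmoid: s * (1 - s), where s = sigmoid(x)."""
    s = sigmoid(x)
    return s * (1.0 - s)


for v in (-4.0, -1.0, 0.0, 1.0, 4.0):
    print(v, round(sigmoid_grad(v), 4))
# The largest value, 0.25, appears at v = 0.0
```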

Q5. The derivative of the loss function with respect to the weights in a deep neural network can be computed as:

a. Sum of derivative of cost function, derivative of non-linear transfer function and derivative of linear network.

b. Product of derivative of cost function and derivative of non-linear transfer function.

c. Product of derivative of cost function, derivative of non-linear transfer function and derivative of linear network.

d. Sum of derivative of cost function and derivative of non-linear transfer function.

Answer: c. Product of derivative of cost function, derivative of non-linear transfer function and derivative of linear network.
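Consider a single neuron with linear part z = w·x + b, sigmoid transfer function a = σ(z) and squared-error cost L = ½(a − t)². The chain rule gives ∂L/∂w = (a − t) · σ'(z) · x, a product of the three derivatives. The sketch below compares that product with a central finite-difference estimate; the values are arbitrary and chosen only for illustration.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def loss(w, x, b, t):
    """Squared-error cost of one sigmoid neuron."""
    return 0.5 * (sigmoid(w * x + b) - t) ** 2


def grad_w(w, x, b, t):
    z = w * x + b            # linear part
    a = sigmoid(z)           # non-linear transfer function
    dL_da = a - t            # derivative of the cost function
    da_dz = a * (1.0 - a)    # derivative of the sigmoid
    dz_dw = x                # derivative of the linear part w.r.t. w
    return dL_da * da_dz * dz_dw   # a product of the three, not a sum


w, x, b, t = 0.8, 1.5, -0.2, 1.0
eps = 1e-6
numeric = (loss(w + eps, x, b, t) - loss(w - eps, x, b, t)) / (2 * eps)
print(grad_w(w, x, b, t), numeric)  # the two values agree closely
```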

Q6. Which of the following models can be employed for unsupervised learning?

a. Autoencoder

b. Restricted Boltzmann machines

c. Both a and b

d. None

Answer: c. Both a and b

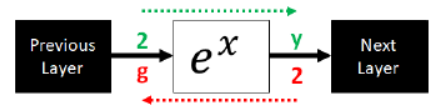

Q7. Find the gradient component ‘g’ of this function.

a. 2

b. $e^2$

c. $2e^2$

d. 4

Answer: c. $2e^2$

Q8. What is the similarity between an autoencoder and Principal Component Analysis (PCA)?

a. Both assume nonlinear systems

b. Subspace of weight matrices

c. Both can assume linear systems

d. All of these

Answer: c. Both can assume linear systems

Q9. Which of the following is only an unsupervised learning problem?

a. Digit Recognition

b. Image Segmentation

c. Image Compression

d. All of the above

Answer: c. Image Compression

Q10. What are the dimensions of the encoder weight matrix of an autoencoder (hidden units = 400) constructed to handle 10-dimensional input samples?

a. rows = 10 and columns = 401

b. rows = 400 and columns = 10

c. rows = 11 and columns = 400

d. rows = 400 and columns = 11

Answer: d. rows = 400 and columns = 11
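Mapping 10 inputs to 400 hidden units takes a 400 × 10 weight matrix. Folding the bias into an extra column, with a constant 1 appended to each input, gives 400 × 11 (option d). Here is a minimal sketch of that convention, using random weights and a hypothetical input:

```python
import random

random.seed(0)  # reproducible random weights
n_in, n_hidden = 10, 400

# Encoder weights with the bias folded in as an extra column: shape (400, 11)
W = [[random.gauss(0.0, 0.01) for _ in range(n_in + 1)] for _ in range(n_hidden)]


def encode(W, x):
    """Linear encoder h = W @ [x; 1] (no activation, to keep the sketch small)."""
    x_aug = list(x) + [1.0]  # constant 1 so the last column acts as the bias
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x_aug)) for row in W]


x = [0.1 * k for k in range(n_in)]  # a hypothetical 10-dimensional input
h = encode(W, x)
print(len(W), len(W[0]), len(h))    # 400 11 400
```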
