An Introduction to Artificial Intelligence | Week 9

Session: JAN-APR 2024

Course name: An Introduction to Artificial Intelligence

Course Link: Click Here

For answers or latest updates join our telegram channel: Click here to join

These are An Introduction to Artificial Intelligence Answers Week 9

Q1. There is a biased coin with probability of heads θ. Let the prior on this probability be given by the PDF P(θ) = 6θ(1 − θ). The coin is flipped 5 times, and it lands heads each time. What is the MAP estimate of θ? (Return the answer rounded to 3 decimal places; e.g., if the answer is 0.3456, return 0.346.)

Answer: 0.857 (the posterior is proportional to θ^6 (1 − θ), whose maximum is at θ = 6/7)
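
The MAP estimate for Q1 can be checked in closed form. The sketch below assumes the prior 6θ(1 − θ) is read as a Beta(2, 2) density, so that five heads give a Beta(7, 2) posterior whose mode is 6/7 ≈ 0.857. The helper name `beta_bernoulli_map` is illustrative and not part of the course material.

```python
from fractions import Fraction


def beta_bernoulli_map(alpha, beta, heads, tails):
    """MAP estimate of theta for a Beta(alpha, beta) prior and coin-flip data.

    The posterior is Beta(alpha + heads, beta + tails); its mode is
    (a - 1) / (a + b - 2) when both posterior parameters exceed 1.
    """
    a = alpha + heads
    b = beta + tails
    if a <= 1 or b <= 1:
        raise ValueError("mode formula needs both posterior parameters > 1")
    return Fraction(a - 1, a + b - 2)


# Assumption: P(theta) = 6 * theta * (1 - theta) is the Beta(2, 2) density.
theta_map = beta_bernoulli_map(alpha=2, beta=2, heads=5, tails=0)
print(theta_map, round(float(theta_map), 3))  # 6/7 0.857
```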

Q2. How many of the following statements are CORRECT?

a. Rejection sampling (before rejection step) samples from the posterior distribution of the Bayes Net given the evidence variables

b. In the limit of infinite sampling, rejection sampling will return consistent posterior estimates

c. In the limit of infinite sampling, likelihood weighting will return consistent posterior estimates

d. Exact inference in Bayesian networks can be done in polynomial time in the worst case.

e. MCMC with Gibbs Sampling is guaranteed to converge to the same distribution irrespective of the initial state for all kinds of Bayes Networks

f. Performance of Likelihood weighting degrades in practice when there are many evidence variables that are lower in the Bayes Net

g. Late occurring evidence variables can help guide sample generation in likelihood weighting

Answer: 2

Q3. What is P(?1 = 1) + P(?5 = 2), where “?i” denotes the “?” in the ith row, after the 1st E-step? (Round to 2 decimal places.)

Answer: 0.33

Q4. What is P(X = 1 | B = 1) – P(B = 0 | A = 1) after the 1st M-step? (Round to 3 decimal places.)

Answer: 0.654

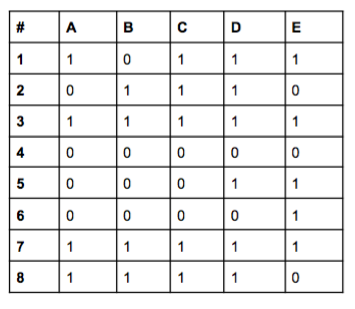

Q5. Consider the Bayes net shown in the figure above.

We wish to calculate P(c | ¬w, r). Select the correct statements:

a) When using rejection sampling, we will accept the sample (¬c, s, ¬w, r).

b) When using likelihood weighting, every sample will be weighted 0.5.

c) When using MCMC with Gibbs sampling, we vary assignments to C and S.

d) S is conditionally independent of all other variables, given its parent C.

Answer: a), d)

Q6. Which of the following is/are INCORRECT?

a) ML (Maximum Likelihood) works better than MAP when the data is sparse.

b) In the limit of infinite data, MAP reduces to MLE.

c) MAP estimation needs a prior distribution on the parameter values.

d) MLE assumes a beta distribution prior on the parameter values.

Answer: a), d)

Q7. How many of the following statements are CORRECT?

a. The 15-Puzzle has a single agent setup

b. The game of poker has a partially observable environment

c. The game of poker is deterministic

d. The environment of an autonomous driving car is fully observable

e. Playing the game of chess under time constraints is semi-dynamic

f. The outcomes of the decisions taken by an autonomous driving agent are stochastic in nature

g. The game of poker has a discrete environment

h. Interactive Medical Diagnosis chatbots do episodic decision making

Answer: 3

Q8. Consider the following scenario, where we have a bag of candies. There are only two types of candies in the bag: red (R) and green (G). We want to estimate the percentage of candies of each type in the bag. We perform Bayesian learning where the hypothesis space is given by H = {h1, h2, h3, h4, h5}. We have the following 5 hypotheses and their initial probabilities (prior).

What is the probability of picking a green candy, as predicted by our initial estimates?

Answer: 0.175

Q9. Consider the same table as in Question 8. We now pick a random candy from the bag, and it turns out to be green. We then recompute the probabilities of our hypotheses using Bayesian learning (posterior):

(Values in the table are percentages.)

What is the value of 5a + 4b + 3c + 2d + e?

Answer: 16

Q10. Which of the following is/are CORRECT?

a) To learn the structure of a Bayes net, we can perform a local search over the space of network structures.

b) If the structure of the Bayes net is known and we are trying to estimate the parameters of the network from data that has some missing values, we can use an EM algorithm to estimate both the missing values and the parameters.

c) Given a training dataset, a fully connected Bayes net is always guaranteed to fit the dataset the best.

d) While learning the Bayes net structure from data, if we use a scoring function that is a linear combination of a term inversely proportional to model complexity and a term proportional to how well the Bayes net fits the data, then the model with the highest score might not be the fully connected Bayes net.

Answer: c), d)

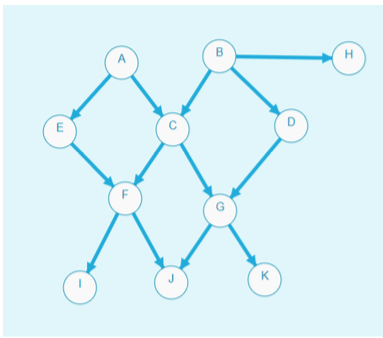

Q11. Consider the following Bayesian Network.

We are given the following samples.

What is the estimated value of P(C=1|B=0) if we use add-one smoothing? Round off your answer to 2 decimal places (e.g., if your answer is 0.14159…, write 0.14).

Answer: 0.65
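
As a rough illustration of add-one smoothing, the sketch below estimates a conditional probability as (count + 1) / (parent count + number of child values). The quiz's own sample table is not reproduced on this page, so the `samples` list and the helper name `add_one_estimate` are hypothetical and are not expected to reproduce the answer above.

```python
def add_one_estimate(samples, child, child_value, parent, parent_value,
                     num_child_values=2):
    """Estimate P(child = child_value | parent = parent_value) with add-one smoothing."""
    matching_parent = [s for s in samples if s[parent] == parent_value]
    matching_both = [s for s in matching_parent if s[child] == child_value]
    # Add 1 to the joint count and the number of child values to the parent count.
    return (len(matching_both) + 1) / (len(matching_parent) + num_child_values)


# Hypothetical samples (NOT the quiz data): three with B = 0, two of them with C = 1.
samples = [
    {"B": 0, "C": 1},
    {"B": 0, "C": 1},
    {"B": 0, "C": 0},
    {"B": 1, "C": 1},
]
estimate_c1_given_b0 = add_one_estimate(samples, "C", 1, "B", 0)
print(estimate_c1_given_b0)  # (2 + 1) / (3 + 2) = 0.6
```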

More Nptel Courses: https://progiez.com/nptel-assignment-answers

Course Name: An Introduction to Artificial Intelligence

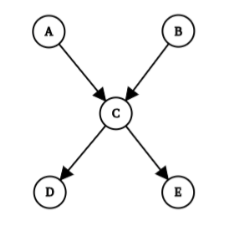

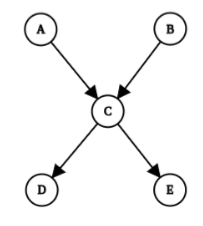

Q1. Consider the following Bayesian network with binary variables.

Calculate the probability P(a | d, e) using rejection sampling, given the following samples. Return the answer as a decimal rounded to 2 decimal places (for example, if it is 0.333, return 0.33).

Answer: 0.75
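
The rejection-sampling estimate is simply the fraction of evidence-consistent samples in which the query holds. The sketch below shows that counting step; the sample table from the quiz is not reproduced on this page, so the `samples` list is hypothetical (and lists only A, D, E), and `rejection_estimate` is an illustrative name.

```python
def rejection_estimate(samples, query, evidence):
    """Estimate P(query | evidence) from prior samples.

    Samples that disagree with the evidence are rejected; the estimate is
    the fraction of the remaining samples that also satisfy the query.
    """
    accepted = [s for s in samples
                if all(s[var] == val for var, val in evidence.items())]
    if not accepted:
        raise ValueError("no sample is consistent with the evidence")
    hits = sum(1 for s in accepted
               if all(s[var] == val for var, val in query.items()))
    return hits / len(accepted)


# Hypothetical samples (NOT the quiz data).
samples = [
    {"A": True, "D": True, "E": True},     # accepted, query holds
    {"A": False, "D": True, "E": True},    # accepted, query fails
    {"A": True, "D": False, "E": True},    # rejected (D is false)
    {"A": True, "D": True, "E": False},    # rejected (E is false)
    {"A": False, "D": False, "E": False},  # rejected
]
estimate = rejection_estimate(samples, query={"A": True},
                              evidence={"D": True, "E": True})
print(estimate)  # 1 of 2 accepted samples has A true
```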

Q2. Which of the following statements are true?

a. Rejection sampling samples from the prior distribution

b. Rejection sampling samples from the posterior distribution

c. Likelihood sampling samples from the prior distribution

d. Likelihood sampling samples from the posterior distribution

Answer: a. Rejection sampling samples from the prior distribution

Q3. Which of the following properties are valid for the environment of the Turing Test?

a. Fully observable

b. Multi-Agent

c. Dynamic

d. Stochastic

Answer: b, c, d

Q4. Consider the following Bayesian Network. Suppose you are doing likelihood weighting to determine P(s|¬w,c).

What is the weight of the sample (c, s, r, ¬w)?

Return the answer as a decimal rounded to 3 decimal places (for example, if it is 0.1234, return 0.123).

Answer: 0.005
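
In likelihood weighting, a sample's weight is the product of the probabilities of the evidence values given their parents in that sample. The network figure is not reproduced on this page, so the sketch below assumes the usual textbook Cloudy/Sprinkler/Rain/WetGrass parameters, P(c) = 0.5 and P(w | s, r) = 0.99; under that assumption the weight is 0.5 × 0.01 = 0.005.

```python
# Assumed CPT entries (textbook sprinkler network, not taken from this page).
P_C_TRUE = 0.5
P_W_TRUE_GIVEN_S_R = {
    (True, True): 0.99,
    (True, False): 0.90,
    (False, True): 0.90,
    (False, False): 0.00,
}


def likelihood_weight(sample):
    """Weight of a sample when the evidence is C = true and W = false."""
    weight = P_C_TRUE  # evidence C = true has no parents
    p_w_true = P_W_TRUE_GIVEN_S_R[(sample["S"], sample["R"])]
    weight *= 1.0 - p_w_true  # evidence W = false given the sampled S and R
    return weight


sample = {"C": True, "S": True, "R": True, "W": False}
weight = likelihood_weight(sample)
print(round(weight, 3))  # 0.005
```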

Q5. Suppose we use MCMC with Gibbs sampling to determine P(s|w) in the above problem. Which of the following are correct statements in this case?

a. We might need to calculate P(w|¬s,c,r) during the sampling process.

b. The relative frequency of reaching the states with S assigned true after sufficiently many steps will provide an estimate of P(s|w)

c. We can get a reliable estimate of probability by using the first few samples only.

d. Sampling using MCMC is asymptotically equivalent to sampling from the prior probability distribution.

Answer: b. The relative frequency of reaching the states with S assigned true after sufficiently many steps will provide an estimate of P(s|w)

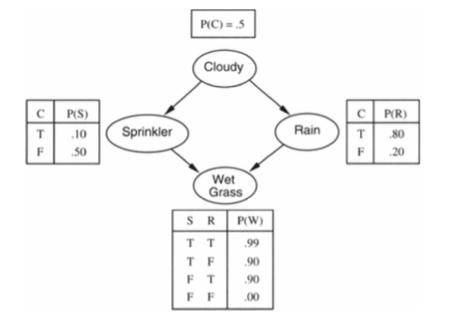

Q6. Consider the following Bayesian Network. What is the Markov blanket of C? Return the answer as a lexicographically sorted string (for example, if the blanket consists of the nodes A, D and C, return ACD).

Answer: ABDEFG
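
A node's Markov blanket consists of its parents, its children, and its children's other parents. The sketch below computes it from a parent map; because the quiz's figure is not reproduced on this page, it uses the textbook sprinkler network as a stand-in, and `markov_blanket` is an illustrative helper name.

```python
def markov_blanket(parents, node):
    """Return the Markov blanket of `node` as a sorted string.

    `parents` maps each variable to the list of its parents in the DAG.
    """
    children = [v for v, ps in parents.items() if node in ps]
    blanket = set(parents[node]) | set(children)
    for child in children:
        blanket |= set(parents[child])  # co-parents of each child
    blanket.discard(node)
    return "".join(sorted(blanket))


# Stand-in network (NOT the quiz figure): C -> S, C -> R, S -> W, R -> W.
sprinkler = {"C": [], "S": ["C"], "R": ["C"], "W": ["S", "R"]}
blanket_of_s = markov_blanket(sprinkler, "S")
print(blanket_of_s)  # CRW
```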

Q7. Which of the following provides a plausible way to learn the structure of Bayesian networks from data?

a. Bayesian learning

b. Local search in the space of possible structures

c. MAP

d. MLE

Answer: b. Local search in the space of possible structures

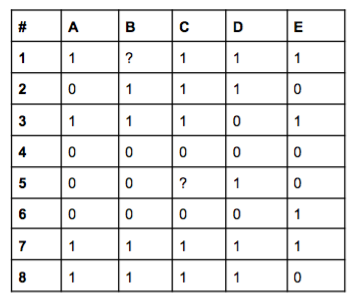

Q8. Consider the following Bayesian Network, where each variable is binary.

We have the following training examples for the above Bayesian net, where two examples contain unobserved values (denoted by ?). All of the parameters of the Bayesian network are set to 0.5 initially, except for P(b) and P(c|¬a,¬b), which are initialised to 0.8. What is the value of P(c|a,b) after simulating the second M-step of the simple (hard) EM algorithm? If the answer is the fraction m/n, where m and n have no common factors, return m+n (e.g., return 3 if the answer is 2/4, since 2/4 reduces to 1/2).

Answer: 2

Q9. Ram is given a possibly biased coin, and he is supposed to estimate the probability of it landing heads. Ram tosses the coin 5 times and gets heads 3 times. Suppose that Ram uses maximum likelihood estimation as the learning algorithm for this task. What, according to him, is the probability of getting heads for the coin?

Give the answer rounded off to 1 decimal place.

Answer: 0.6

Q10. Suppose that Ram has a prior that the probability of the coin landing heads is one of 0.4 (case 1), 0.5 (case 2), or 0.6 (case 3), with probability 1/3 each. Ram tosses the coin once and gets heads. What is the posterior probability of case 1 given this observation?

Give the answer rounded off to 2 decimal places.

Answer: 0.27
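
Both of Ram's coin questions reduce to short calculations: the maximum likelihood estimate is heads/tosses = 3/5, and the posterior of case 1 is 0.4 / (0.4 + 0.5 + 0.6) = 4/15 ≈ 0.27. The sketch below reproduces these with exact fractions; the function name `posterior` is illustrative.

```python
from fractions import Fraction


def posterior(priors, likelihoods):
    """Bayes' rule over a finite hypothesis set: normalise prior * likelihood."""
    unnormalised = [p * l for p, l in zip(priors, likelihoods)]
    total = sum(unnormalised)
    return [u / total for u in unnormalised]


# Q9: maximum likelihood estimate from 3 heads in 5 tosses.
mle = Fraction(3, 5)

# Q10: three candidate head probabilities with a uniform prior.
thetas = [Fraction(2, 5), Fraction(1, 2), Fraction(3, 5)]
priors = [Fraction(1, 3)] * 3
# The likelihood of observing one head under each case is theta itself.
post = posterior(priors, thetas)

print(float(mle))                         # 0.6
print(post[0], round(float(post[0]), 2))  # 4/15 0.27
```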

More NPTEL Solutions: https://progiez.com/nptel-assignment-answers/