An Introduction to Artificial Intelligence | Week 10

Session: JAN-APR 2024

Course name: An Introduction to Artificial Intelligence

Course Link: Click Here

For answers or latest updates join our telegram channel: Click here to join

These are An Introduction to Artificial Intelligence Answers Week 10

Q1. Choose the CORRECT statements:

A. If using the Maximax criterion, we would choose decision D.

B. Given the current reward table, a rational agent would never prefer decision B over C.

C. If using the Maximin criterion, we would take decision A.

D. If using the Equal Likelihood criterion, we would choose decision B.

E. If using Minimax Regret, we would choose decision C.

Answer: A, B, C
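
The criteria named in Q1 can be written down compactly. The sketch below is illustrative only: the reward table `REWARDS` is hypothetical (the assignment's table is not reproduced on this page), with one row per decision and one column per state of nature. It shows how each criterion turns a row of rewards into a single score.

```python
# Hypothetical reward table (NOT the assignment's table).
# Rows = decisions, columns = states of nature (favourable, neutral, unfavourable).
REWARDS = {
    "A": [300, 100, -50],
    "B": [200, 160, 0],
    "C": [400, 100, -200],
    "D": [600, 50, -400],
}

def maximax(table):
    # Optimistic: pick the decision whose best outcome is largest.
    return max(table, key=lambda d: max(table[d]))

def maximin(table):
    # Pessimistic: pick the decision whose worst outcome is largest.
    return max(table, key=lambda d: min(table[d]))

def equal_likelihood(table):
    # Treat every state as equally likely and maximise the plain average.
    return max(table, key=lambda d: sum(table[d]) / len(table[d]))

def hurwicz(table, alpha):
    # Weighted mix of best and worst outcomes; alpha is the coefficient of realism.
    return max(table, key=lambda d: alpha * max(table[d]) + (1 - alpha) * min(table[d]))

def minimax_regret(table):
    # Regret = best reward achievable in a state minus the reward actually obtained.
    n_states = len(next(iter(table.values())))
    best = [max(row[j] for row in table.values()) for j in range(n_states)]
    max_regret = {d: max(best[j] - row[j] for j in range(n_states)) for d, row in table.items()}
    return min(max_regret, key=max_regret.get)
```

With this made-up table the criteria disagree: Maximax picks D, Maximin and Equal Likelihood pick B, Hurwicz with α = 0.5 picks A, and Minimax Regret picks C. Ties would be broken by table order, since Python's `max` and `min` return the first best key.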

Q2. Consider the same reward table. The probability of the neutral state is 0.6, and that of the unfavourable state is 0.1. Which decision would you take based on expected reward?

Answer: D

Q3. For what values of α would you take action D according to the Hurwicz criterion? The values of α lie in the range (p/q, r], where p and q are coprime natural numbers. Report p+q+r.

Answer: 5
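
The interval form in Q3 follows from the fact that each Hurwicz score, α·max + (1 − α)·min = min + α·(max − min), is linear in α, so the decision with the largest spread wins once α passes the largest crossing point with any other decision. A minimal sketch, again on a hypothetical reward table (not the assignment's), using exact fractions so the threshold comes out as p/q:

```python
from fractions import Fraction

# Hypothetical reward table (NOT the assignment's); rows are decisions, columns are states.
REWARDS = {
    "A": [300, 100, -50],
    "B": [200, 160, 0],
    "C": [400, 100, -200],
    "D": [600, 50, -400],
}

def hurwicz_threshold(table, target):
    """Return t such that `target` has the strictly highest Hurwicz score for every
    alpha in (t, 1] (an empty interval if t >= 1).

    Each score is linear in alpha: min + alpha * (max - min). This sketch assumes
    `target` has the largest spread (max - min), so it can only win for large alpha.
    """
    t_lo, t_hi = min(table[target]), max(table[target])
    threshold = Fraction(0)
    for name, row in table.items():
        if name == target:
            continue
        lo, hi = min(row), max(row)
        slope_gap = (t_hi - t_lo) - (hi - lo)
        if slope_gap <= 0:
            raise ValueError(f"{target} does not have a larger spread than {name}")
        # target beats `name` exactly when alpha > (lo - t_lo) / slope_gap
        threshold = max(threshold, Fraction(lo - t_lo, slope_gap))
    return threshold
```

For this made-up table, `hurwicz_threshold(REWARDS, "D")` returns 7/13, so D would be chosen for α in (7/13, 1], an interval of the same (p/q, r] shape as in Q3.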

Q4. Consider the same probability distribution as in Q2. What is the amount of money that you should pay for perfect information? (Do not enter any symbols such as $; enter only the amount.)

Answer: 200
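
Q2 and Q4 both rest on expected values: Q2 picks the decision with the highest expected reward, and Q4 asks for the expected value of perfect information (EVPI), i.e. the expected reward when the state is revealed before deciding, minus the best expected reward without that knowledge. A minimal sketch on a hypothetical table; the probabilities 0.6 and 0.1 come from Q2, while assigning the remaining 0.3 to a single favourable state is an assumption about the table's layout.

```python
# Hypothetical reward table (NOT the assignment's); columns: favourable, neutral, unfavourable.
REWARDS = {
    "A": [300, 100, -50],
    "B": [200, 160, 0],
    "C": [400, 100, -200],
    "D": [600, 50, -400],
}
# Neutral = 0.6 and unfavourable = 0.1 are from Q2; the 0.3 favourable state is an assumption.
PROBS = [0.3, 0.6, 0.1]

def expected_reward(row, probs):
    return sum(p * r for p, r in zip(probs, row))

def best_decision(table, probs):
    # Decision with the highest expected reward when the state is not known in advance.
    return max(table, key=lambda d: expected_reward(table[d], probs))

def evpi(table, probs):
    # With perfect information we learn the state first, then pick the best decision for it.
    n_states = len(probs)
    with_info = sum(probs[j] * max(row[j] for row in table.values()) for j in range(n_states))
    without_info = expected_reward(table[best_decision(table, probs)], probs)
    return with_info - without_info
```

On this made-up table, D has the highest expected reward (170), the expected reward with perfect information is 276, and so the EVPI, which is the most one should pay for the information, is 106.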

Q5. Choose the INCORRECT statements:

A. A most optimistic agent will choose the Maximax criterion.

B. A realistic agent will choose the Minimax criterion.

C. Equal Likelihood is the same as the Hurwicz criterion with α = 0.5.

D. Consider 2 lotteries. Lottery 1 always returns $100. Lottery 2 returns $10000 with probability 0.01 and $0 with probability 0.99. Both of them have the same expected value.

Answer: B, C
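
Two of the statements in Q5 can be checked numerically. The lottery payoffs below come from the last statement; the payoff rows `two_states` and `three_states` are hypothetical, chosen to show that Equal Likelihood and Hurwicz with α = 0.5 always agree when there are only two states but can disagree with three or more.

```python
def expected_value(lottery):
    # lottery: list of (probability, payoff) pairs
    return sum(p * x for p, x in lottery)

def equal_likelihood_score(row):
    # Plain average over all states.
    return sum(row) / len(row)

def hurwicz_score(row, alpha):
    # Only the best and worst outcomes matter.
    return alpha * max(row) + (1 - alpha) * min(row)

lottery_1 = [(1.0, 100)]
lottery_2 = [(0.01, 10000), (0.99, 0)]

two_states = [80, 20]      # hypothetical payoffs
three_states = [90, 0, 0]  # hypothetical payoffs
```

Both lotteries have an expected value of 100 (up to floating-point rounding). For the two-state row both criteria give 50, but for the three-state row Equal Likelihood gives 30 while Hurwicz with α = 0.5 gives 45, which is why the Equal Likelihood statement does not hold in general.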

Q6. Consider the Value Iteration algorithm for finding the optimal value function for each state in an MDP specified by ⟨S, A, T, R, S0, γ⟩. We terminate when the maximum change of the value function across all states is less than ϵ.

Which of the following is/are TRUE?

A. The space complexity of the algorithm doesn’t depend on |A|.

B. The number of iterations taken by the algorithm increases as we decrease ϵ.

C. The number of iterations taken by the algorithm increases as we decrease γ.

D. The time complexity of a single iteration is O(|A|^2 |S|).

Answer: A, B
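
The statements about ϵ and γ in Q6 concern how many sweeps the stopping rule needs. The sketch below runs value iteration on a hypothetical two-state MDP (with rewards rather than costs) and counts sweeps until the largest change falls below ϵ. Because the Bellman backup is a γ-contraction, the per-sweep change shrinks by at least a factor γ each sweep, so a smaller ϵ or a larger γ means more sweeps.

```python
# Hypothetical 2-state, 2-action MDP (not from the assignment).
# T[s][a] = [(next_state, probability), ...]; R[s][a] = immediate reward.
T = {
    0: {"stay": [(0, 1.0)], "go": [(1, 0.8), (0, 0.2)]},
    1: {"stay": [(1, 1.0)], "go": [(0, 1.0)]},
}
R = {
    0: {"stay": 0.0, "go": 1.0},
    1: {"stay": 2.0, "go": 0.0},
}

def value_iteration(T, R, gamma, eps):
    """Return (V, number_of_sweeps); stop once the largest change in a sweep is below eps."""
    V = {s: 0.0 for s in T}  # one number per state
    sweeps = 0
    while True:
        sweeps += 1
        # Bellman optimality backup for every state; the inner sums touch at most
        # |S| * |A| * |S| (state, action, next-state) terms per sweep.
        new_V = {
            s: max(R[s][a] + gamma * sum(p * V[s2] for s2, p in T[s][a]) for a in T[s])
            for s in T
        }
        delta = max(abs(new_V[s] - V[s]) for s in T)
        V = new_V
        if delta < eps:
            return V, sweeps
```

On this model, tightening ϵ from 1e-2 to 1e-6 at γ = 0.9 increases the sweep count, and lowering γ to 0.5 at ϵ = 1e-6 reduces it.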

Q7. We are given the following cyclic MDP with 4 states A, B, C and G (the goal state). The transition costs and probabilities are shown in the figure. We evaluate the policy π that takes action a0 in A, a1 in B and a2 in C; G is a terminal state. What is the value of 29·|Vπ(A) − Vπ(C)|? Here |x| is the absolute value of x.

The value function of a state is the expected discounted cost paid to reach the goal starting from the state following the policy.

Answer: 5
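
Evaluating a fixed policy in Q7 means solving V(s) = c(s, π(s)) + γ Σ_{s'} T(s, π(s), s') V(s') for every non-goal state, with V(G) = 0. The figure is not reproduced here, so the model below is hypothetical, and γ = 1 is an assumption. The sketch simply repeats the backup until the values stop changing.

```python
# Hypothetical cyclic MDP under a fixed policy (NOT the figure from Q7).
# POLICY_MODEL[s] = (cost of the action chosen in s, [(next_state, probability), ...])
POLICY_MODEL = {
    "A": (1.0, [("B", 0.5), ("C", 0.5)]),
    "B": (2.0, [("G", 0.5), ("A", 0.5)]),
    "C": (1.0, [("G", 1.0)]),
}
GOAL = "G"

def evaluate_policy(model, goal, gamma=1.0, tol=1e-10):
    """Expected (discounted) cost to reach `goal` from each state under the fixed policy."""
    V = {s: 0.0 for s in model}
    V[goal] = 0.0  # the terminal goal state costs nothing further
    while True:
        delta = 0.0
        for s, (cost, successors) in model.items():
            new_v = cost + gamma * sum(p * V[s2] for s2, p in successors)
            delta = max(delta, abs(new_v - V[s]))
            V[s] = new_v  # in-place update: later states in this sweep see the new value
        if delta < tol:
            return V

V = evaluate_policy(POLICY_MODEL, GOAL)
```

For this made-up model the exact values are V(A) = 10/3, V(B) = 11/3 and V(C) = 1, which the loop reproduces to within the tolerance; solving the same linear equations directly would give them in one step.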

Q8. Which of the following is/are TRUE in the context of Markov Decision Processes?

A. In general, Value Iteration converges in fewer iterations than Policy Iteration.

B. Using a discount factor less than 1 ensures that the value functions do not diverge.

C. If the MDP has a fixed point solution, then value iteration is guaranteed to converge in a finite number of iterations for any value of epsilon, irrespective of the starting point.

D. In each iteration of policy iteration, value iteration is run as a subroutine, using a fixed policy.

Answer: B, C, D
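
The last statement of Q8 describes the usual structure of policy iteration: evaluate the current policy (here by repeated Bellman backups with the action fixed, i.e. value iteration without the max), then improve it greedily, and stop when the policy no longer changes. A minimal sketch on a hypothetical two-state MDP with rewards; γ = 0.9 is an assumption.

```python
# Hypothetical 2-state MDP with rewards (not from the assignment).
T = {
    0: {"stay": [(0, 1.0)], "go": [(1, 0.8), (0, 0.2)]},
    1: {"stay": [(1, 1.0)], "go": [(0, 1.0)]},
}
R = {
    0: {"stay": 0.0, "go": 1.0},
    1: {"stay": 2.0, "go": 0.0},
}

def q_value(s, a, V, gamma):
    return R[s][a] + gamma * sum(p * V[s2] for s2, p in T[s][a])

def evaluate(policy, gamma, tol=1e-10):
    # Policy evaluation: Bellman backups with the action fixed by the policy (no max).
    V = {s: 0.0 for s in T}
    while True:
        new_V = {s: q_value(s, policy[s], V, gamma) for s in T}
        if max(abs(new_V[s] - V[s]) for s in T) < tol:
            return new_V
        V = new_V

def policy_iteration(gamma=0.9):
    policy = {s: "stay" for s in T}
    while True:
        V = evaluate(policy, gamma)
        stable = True
        for s in T:
            best = max(T[s], key=lambda a: q_value(s, a, V, gamma))
            # Switch only on a strict improvement, so ties cannot make the policy flip forever.
            if q_value(s, best, V, gamma) > q_value(s, policy[s], V, gamma) + 1e-9:
                policy[s] = best
                stable = False
        if stable:
            return policy, V

policy, V = policy_iteration()
```

Starting from "stay" everywhere, the first improvement step switches state 0 to "go"; the second improvement step changes nothing, so the loop stops.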

Q9. Consider a deterministic policy 𝜋 such that 𝜋(s0) = a00, 𝜋(s1) = a1, 𝜋(s2) = a20, 𝜋(s3) = a3, 𝜋(s4) = a41. What will be the value of state s0 under this policy?

Answer: 9

Q10. If we perform value iteration, what is V2(s2), i.e. the value of state s2 at the end of the second iteration? Before the first iteration, all non-goal state values are initialised to 0 and the goal state is initialised to +15.

Answer: 9
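
Q10 asks for values after a fixed number of synchronous sweeps, where every update in sweep k reads only values from sweep k − 1 and the goal keeps its initial +15. The figure for Q9 and Q10 is not reproduced here, so the transitions and rewards below are hypothetical, and γ = 1 is an assumption.

```python
# Hypothetical MDP (NOT the assignment's figure).
# MODEL[state][action] = (reward, [(next_state, probability), ...])
MODEL = {
    "s0": {"a": (-1.0, [("s1", 1.0)]), "b": (-2.0, [("s2", 1.0)])},
    "s1": {"a": (-1.0, [("g", 0.5), ("s0", 0.5)])},
    "s2": {"a": (-3.0, [("g", 1.0)]), "b": (-1.0, [("s1", 1.0)])},
}

def value_iteration_sweeps(model, k, goal="g", goal_value=15.0, gamma=1.0):
    V = {s: 0.0 for s in model}  # non-goal states start at 0
    V[goal] = goal_value         # the goal is terminal, so its value is never updated
    for _ in range(k):
        # Synchronous sweep: every new value is computed from the previous sweep's V.
        new_V = dict(V)
        for s, actions in model.items():
            new_V[s] = max(
                r + gamma * sum(p * V[s2] for s2, p in successors)
                for r, successors in actions.values()
            )
        V = new_V
    return V

V2 = value_iteration_sweeps(MODEL, 2)
```

For this made-up model the first sweep gives (s0, s1, s2) = (−1, 6.5, 12) and the second gives (10, 6, 12); s0 only benefits from the goal's +15 in the second sweep, after s2 has picked it up in the first.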

Q11. Consider the following Bayesian Network.

We are given the following samples.

What is the estimated value of P(C=1|B=0) if we use Add-One smoothing? Round your answer to 2 decimal places (e.g., if your answer is 0.14159…, write 0.14).

Answer:
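
Add-One (Laplace) smoothing adds one pseudo-count to every value of the child variable, so for a binary C: P(C=1 | B=0) ≈ (N(C=1, B=0) + 1) / (N(B=0) + 2). The network and samples are not reproduced on this page; the sketch assumes B is the only parent of C, and its samples are hypothetical.

```python
# Hypothetical samples (NOT the assignment's); each sample assigns a value to every binary variable.
SAMPLES = [
    {"A": 1, "B": 0, "C": 1},
    {"A": 0, "B": 0, "C": 0},
    {"A": 1, "B": 1, "C": 1},
    {"A": 0, "B": 0, "C": 1},
    {"A": 1, "B": 1, "C": 0},
]

def add_one_conditional(samples, child, child_value, parent, parent_value, child_arity=2):
    matching_parent = [s for s in samples if s[parent] == parent_value]
    joint = sum(1 for s in matching_parent if s[child] == child_value)
    # One pseudo-count per child value, so the denominator grows by the child's arity.
    return (joint + 1) / (len(matching_parent) + child_arity)
```

Here three samples have B = 0 and two of those have C = 1, so the smoothed estimate is (2 + 1)/(3 + 2) = 0.60.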

More Weeks of An Introduction to Artificial Intelligence: Click here

More Nptel Courses: https://progiez.com/nptel-assignment-answers

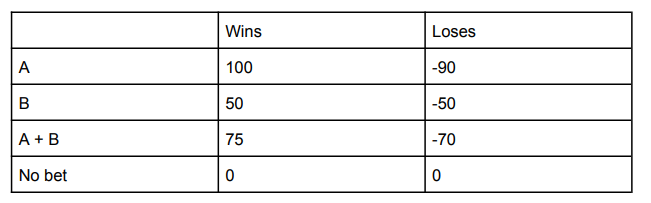

For Questions 1 – 3:

Ram can make one of two bets (say A and B), invest equally in both bets, or make no bet; each bet is based on the outcome of a cricket match. The payoffs to Ram on winning or losing each bet are given in the payoff table.

Q1. If Ram employs minimax regret to decide in this situation, what action does he take?

a. Makes bet A

b. Makes bet B

c. Invest equally in A and B

d. Makes no bet

Answer: b. Makes bet B

Q2. If Ram employs the Hurwicz criterion to decide, for which of the following values of the coefficient of realism does Ram choose not to make a bet?

a. 0.2

b. 0.5

c. 0.7

d. 0.4

Answer: a, d

Q3. Assume that an insider offers to tell Ram beforehand whether he will win or lose a bet. Also assume that every bet is equally likely to succeed or fail. What is the maximum amount of money Ram should be willing to pay the insider for this information?

Answer: 45

Q4. For an MDP with a discrete finite state space S and a discrete finite action space A, what is the memory size of the transition function in the most general case?

a. O(|S|^2)

b. O(|S||A|)

c. O(|S|^2|A|)

d. O(|S||A|^2)

Answer: c. O(|S|^2|A|)
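
In the most general case the transition function stores one probability P(s' | s, a) for every state, action and next state, which is where O(|S|^2|A|) comes from. A small sketch of a dense table; the sizes are arbitrary.

```python
def make_transition_table(n_states, n_actions):
    # T[s][a][s2] = P(s2 | s, a); start from uniform rows so each (s, a) row sums to 1.
    return [[[1.0 / n_states] * n_states for _ in range(n_actions)] for _ in range(n_states)]

def count_entries(table):
    # Total number of stored probabilities.
    return sum(len(row) for per_state in table for row in per_state)

T = make_transition_table(4, 3)  # 4 states, 3 actions: 4 * 3 * 4 = 48 entries
```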

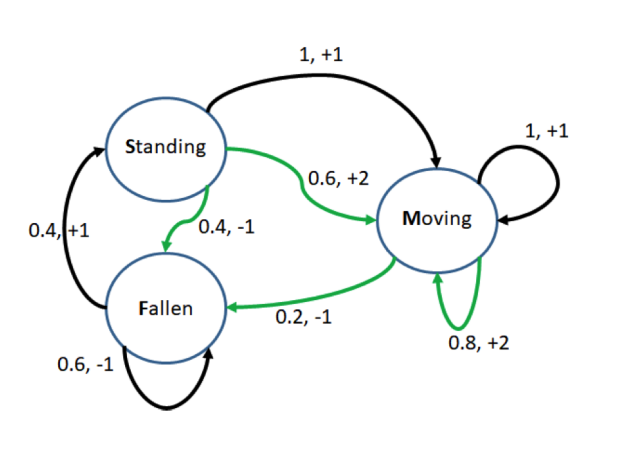

For Questions 5 – 7:

Consider the MDP given below for a robot trying to walk.

The MDP has three states, S = {Standing, Moving, Fallen}, and two actions: moving the robot's legs slowly (a) and moving them aggressively (b), shown in black and green respectively. The task is to perform policy iteration for this MDP with discount factor 1.

Q5. We start with the policy π(s) = a for all s in S and V^π(s) = 0 for all s. What is the value of the Fallen state after one iteration of the Bellman update during policy evaluation?

Answer: -0.2

Q6. Suppose we perform the policy improvement step right after the single Bellman update of Q5. What is the updated policy? Write the actions in the order Standing, Moving, Fallen.

Example, if the policy is 𝜋(Standing) = b, 𝜋(Moving) = b, 𝜋(Fallen) = a, write the answer as bba.

Answer: aba

Q7. After one iteration of policy evaluation as in Q5, what is the value of Q(state,action) where state = Moving and action = b?

Answer: 2.16,2.48
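
Q5 to Q7 chain three steps: one synchronous Bellman backup of the current policy starting from V = 0, the Q-values computed from that V, and a greedy improvement step. Because the figure is not reproduced here, the transition probabilities and rewards below are hypothetical (rewards are attached to transitions), so the numbers differ from the answers above; γ = 1 as stated in the question.

```python
# Hypothetical robot MDP (NOT the figure's numbers).
# MODEL[state][action] = [(next_state, probability, reward), ...]
MODEL = {
    "Standing": {
        "a": [("Moving", 0.9, 1.0), ("Fallen", 0.1, -1.0)],
        "b": [("Moving", 0.6, 2.0), ("Fallen", 0.4, -1.0)],
    },
    "Moving": {
        "a": [("Moving", 0.8, 1.0), ("Fallen", 0.2, -1.0)],
        "b": [("Moving", 0.5, 2.0), ("Fallen", 0.5, -1.0)],
    },
    "Fallen": {
        "a": [("Standing", 0.3, 1.0), ("Fallen", 0.7, -1.0)],
        "b": [("Standing", 0.7, 1.0), ("Fallen", 0.3, -1.0)],
    },
}
ORDER = ("Standing", "Moving", "Fallen")

def q_value(V, s, a, gamma=1.0):
    # Expected immediate reward plus discounted value of the successor state.
    return sum(p * (r + gamma * V[s2]) for s2, p, r in MODEL[s][a])

def bellman_update(V, policy, gamma=1.0):
    # One synchronous sweep of policy evaluation: every state reads the old V.
    return {s: q_value(V, s, policy[s], gamma) for s in MODEL}

def improve(V, gamma=1.0):
    # Greedy policy improvement with respect to V.
    return {s: max(MODEL[s], key=lambda a: q_value(V, s, a, gamma)) for s in MODEL}

V0 = {s: 0.0 for s in MODEL}
V1 = bellman_update(V0, {s: "a" for s in MODEL})
new_policy = "".join(improve(V1)[s] for s in ORDER)
```

With these made-up numbers, one backup gives V(Fallen) = −0.4, Q(Moving, b) = 0.6, and the improved policy is aab.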

Q8. If the utility curve of an agent varies as m^2 for money m, then the agent is:

a. Risk-prone

b. Risk-averse

c. Risk-neutral

d. Can be any of these

Answer: a. Risk-prone
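
A utility that is convex in money, such as U(m) = m^2, makes a gamble worth more to the agent than its expected value paid for certain, which is what risk-prone means. A quick check on a hypothetical 50/50 gamble between $0 and $100:

```python
import math

def utility(m):
    # Convex utility from Q8.
    return m ** 2

lottery = [(0.5, 0.0), (0.5, 100.0)]  # hypothetical 50/50 gamble

expected_money = sum(p * m for p, m in lottery)
expected_utility = sum(p * utility(m) for p, m in lottery)
# Certain amount with the same utility as the gamble (inverse of m^2 for m >= 0).
certainty_equivalent = math.sqrt(expected_utility)
```

The expected payoff is 50, but the expected utility is 5000, whose certainty equivalent √5000 ≈ 70.71 exceeds 50. A concave utility such as √m would reverse the inequality (risk-averse).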

Q9. Which of the following statements are true regarding Markov Decision Processes (MDPs)?

a. Discount factor is not useful for finite horizon MDPs.

b. We assume that the reward and cost models are independent of the previous state transition history, given the current state.

c. MDPs assume full observability of the environment

d. Goal states may have transitions to other states in the MDP

Answer: b, c, d

Q10. Which of the following are true regarding value and policy iteration?

a. Value iteration is guaranteed to converge in a finite number of steps for any value of epsilon and any MDP, if the MDP has a fixed point.

b. The convergence of policy iteration is dependent on the initial policy.

c. Value iteration is generally expected to converge in fewer iterations than policy iteration.

d. In each iteration of policy iteration, value iteration is run as a subroutine, using a fixed policy

Answer: a, d
