Introduction To Machine Learning IIT-KGP NPTEL Week 5 Assignment Answers

Are you looking for NPTEL Introduction To Machine Learning IIT-KGP Week 5 answers? This guide offers assignment solutions to help you master key machine learning concepts such as supervised learning, regression, and classification.

Introduction To Machine Learning IIT-KGP Week 5 Answers (July-Dec 2025)

Question 1. What would be the ideal complexity of the curve which can be used for separating the two classes shown in the image below?

a) Linear

b) Quadratic

c) Cubic

d) Insufficient data to draw conclusion

Question 2. Which of the following options is true?

a) Linear regression error values have to be normally distributed but not in logistic regression

b) Logistic regression error values have to be normally distributed but not in linear regression

c) Both linear and logistic regression error values have to be normally distributed

d) Both linear and logistic regression error values need not be normally distributed

Question 3. Which of the following methods do we use to best fit the data in Logistic Regression?

a) Manhattan distance

b) Maximum Likelihood

c) Jaccard distance

d) Both A and B
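Logistic regression parameters are typically fit by maximum likelihood. The following is a minimal sketch of that idea, assuming a made-up 1-D toy dataset and plain gradient ascent; the names `xs`, `ys`, `log_likelihood`, `fit`, and the step size are illustrative choices, not part of the assignment.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def log_likelihood(w, b, xs, ys):
    """Bernoulli log-likelihood of labels ys under p = sigmoid(w*x + b)."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(w * x + b)
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return total

def fit(xs, ys, lr=0.1, steps=200):
    """Gradient ascent on the log-likelihood (assumed step size and step count)."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        grad_w = sum((y - sigmoid(w * x + b)) * x for x, y in zip(xs, ys))
        grad_b = sum(y - sigmoid(w * x + b) for x, y in zip(xs, ys))
        w += lr * grad_w
        b += lr * grad_b
    return w, b

# Hypothetical toy data; the classes overlap, so the optimum is finite.
xs = [-2.0, -1.0, 0.0, 1.0, 2.0, 3.0]
ys = [0, 0, 1, 0, 1, 1]

ll_start = log_likelihood(0.0, 0.0, xs, ys)
w, b = fit(xs, ys)
ll_end = log_likelihood(w, b, xs, ys)
print(ll_start, ll_end)  # the log-likelihood should increase after fitting
```

Distance measures such as Manhattan or Jaccard play no role in this fitting procedure; the objective is the likelihood of the observed labels.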

Question 4. Given a logistic regression training plot of cost vs. iterations for three learning rates (blue = L₁, red = L₂, green = L₃), which relation is true?

a) L₁ > L₂ > L₃

b) L₁ = L₂ = L₃

c) L₁ < L₂ < L₃

d) None of these

Question 5. State whether True or False. After training an SVM, we can discard all examples which are not support vectors and can still classify new examples.

a) TRUE

b) FALSE

Question 6. Suppose you are dealing with a 3‑class classification problem and you train an SVM using One‑vs‑All. How many times do you train the SVM model?

a) 1

b) 2

c) 3

d) 4

Question 7. What is/are true about kernel in SVM?

1. Kernel function maps low‑dimensional data to high‑dimensional space

2. It’s a similarity function

a) 1

b) 2

c) 1 and 2

d) None of these

Question 8. Suppose you are using an RBF kernel in SVM with a high Gamma value. What does this signify?

a) The model considers even far away points from the hyperplane for modelling.

b) The model considers only the points close to the hyperplane for modelling.

c) The model is not affected by distance of points from the hyperplane.

d) None of the above
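The effect of Gamma can be seen directly from the RBF kernel formula, K(x, z) = exp(-gamma * ||x - z||^2). The sketch below uses made-up points and Gamma values to show how quickly the similarity decays with distance; it illustrates the kernel only and does not train an SVM.

```python
import math

def rbf_kernel(x, z, gamma):
    """RBF kernel: exp(-gamma * squared Euclidean distance)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)

# Hypothetical points chosen for illustration.
origin = (0.0, 0.0)
near = (0.5, 0.0)   # squared distance 0.25 from origin
far = (3.0, 0.0)    # squared distance 9.0 from origin

for gamma in (0.1, 10.0):
    print(gamma, rbf_kernel(origin, near, gamma), rbf_kernel(origin, far, gamma))
```

With a high Gamma the kernel value for the far point is practically zero, so each training point influences only its close neighbourhood; with a low Gamma, distant points still contribute.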

Question 9. Below are labelled instances of 2 classes and hand‑drawn decision boundaries for logistic regression. Which figure demonstrates overfitting of the training data?

a) A

b) B

c) C

d) None of these

Question 10. What do you conclude after seeing the visualization in the previous question?

a) C1 and C2

b) C1 and C3

c) C2 and C3

d) C4

Introduction To Machine Learning IIT-KGP Week 5 Answers (July-Dec 2024)

Q1. What would be the ideal complexity of the curve which can be used for separating the two classes shown in the image below?

A) Linear

B) Quadratic

C) Cubic

D) Insufficient data to draw conclusion

Answer: A) Linear

Q2. Suppose you have a dataset with n=10 features and m=1000 examples. After training a logistic regression classifier with gradient descent, you find that it has high training error and does not achieve the desired performance on training and validation sets. Which of the following might be promising steps to take?

1. Use SVM with a non-linear kernel function

2. Reduce the number of training examples

3. Create or add new polynomial features

A) 1,2

B) 1,3

C) 1,2,3

D) None

Answer: B) 1,3
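High training error suggests the model is too simple for the data (underfitting), which is why a richer hypothesis class helps and fewer examples do not. A minimal sketch, assuming made-up 1-D data where class 1 means |x| > 1: no single threshold on x separates the classes, but a threshold on the added feature x^2 does. The helper `best_threshold_accuracy` is hypothetical and stands in for a 1-D linear classifier.

```python
# Hypothetical 1-D data: class 1 when |x| > 1, class 0 otherwise.
xs = [-3.0, -2.0, -0.5, 0.0, 0.5, 2.0, 3.0]
ys = [1, 1, 0, 0, 0, 1, 1]

def best_threshold_accuracy(values, labels):
    """Best accuracy of 'predict 1 if value > t' (or its mirror) over candidate thresholds t."""
    best = 0.0
    candidates = sorted(set(values))
    thresholds = [candidates[0] - 1.0]
    thresholds += [(a + b) / 2 for a, b in zip(candidates, candidates[1:])]
    for t in thresholds:
        for sign in (1, -1):
            preds = [1 if sign * (v - t) > 0 else 0 for v in values]
            acc = sum(p == y for p, y in zip(preds, labels)) / len(labels)
            best = max(best, acc)
    return best

acc_linear = best_threshold_accuracy(xs, ys)                    # feature: x
acc_squared = best_threshold_accuracy([x * x for x in xs], ys)  # feature: x^2
print(acc_linear, acc_squared)
```

A non-linear kernel in an SVM achieves a similar effect implicitly, without constructing the extra features by hand.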

For answers or latest updates join our telegram channel: Click here to join

These are Introduction To Machine Learning IIT-KGP Week 5 Answers

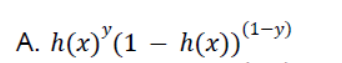

Q3. In logistic regression, we learn the conditional distribution p(y|x), where y is the class label and x is a data point. If h(x) is the output of the logistic regression classifier for an input x, then p(y|x) equals:

A.

B.

C.

D.

Answer: A.

Q4. The output of binary class logistic regression lies in the range:

A. [-1, 0]

B. [0, 1]

C. [-1, 2]

D. [1, 10]

Answer: B. [0, 1]
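The range follows from the sigmoid function that produces the output. A short sketch evaluating it at a few arbitrary inputs (the sample values are illustrative):

```python
import math

def sigmoid(z):
    """Logistic function, the output h(x) of binary logistic regression."""
    return 1.0 / (1.0 + math.exp(-z))

for z in (-10.0, -1.0, 0.0, 1.0, 10.0):
    print(z, sigmoid(z))
```

Every printed value lies between 0 and 1, approaching the endpoints only as z becomes very negative or very positive.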

Q5. State whether True or False: “After training an SVM, we can discard all examples which are not support vectors and can still classify new examples.”

A) TRUE

B) FALSE

Answer: A) TRUE
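The reason is that the SVM decision function is a sum over training points weighted by their dual coefficients, and those coefficients are zero for every point that is not a support vector. A minimal sketch, assuming a made-up 2-D toy set whose hard-margin linear solution (the values in `alpha` and `b`) was worked out by hand for this illustration:

```python
# Hypothetical 2-D toy set with a hand-derived hard-margin linear SVM solution.
X = [(2.0, 0.0), (3.0, 0.0), (4.0, 1.0), (0.0, 0.0), (-1.0, 0.0)]
y = [1, 1, 1, -1, -1]
# Dual coefficients: non-zero only for the two support vectors (2,0) and (0,0).
alpha = [0.5, 0.0, 0.0, 0.5, 0.0]
b = -1.0

def dot(u, v):
    return sum(a * c for a, c in zip(u, v))

def decision(x, X, y, alpha, b):
    """f(x) = sum_i alpha_i * y_i * <x_i, x> + b"""
    return sum(a * yi * dot(xi, x) for a, yi, xi in zip(alpha, y, X)) + b

# Keep only the support vectors (alpha_i > 0) and discard the rest.
keep = [i for i, a in enumerate(alpha) if a > 0]
X_sv = [X[i] for i in keep]
y_sv = [y[i] for i in keep]
alpha_sv = [alpha[i] for i in keep]

new_points = [(5.0, 2.0), (-2.0, 1.0), (1.5, -3.0)]
for p in new_points:
    print(p, decision(p, X, y, alpha, b), decision(p, X_sv, y_sv, alpha_sv, b))
```

Both columns of decision values agree for every new point, since the discarded examples contributed nothing to the sum.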

Q6. Suppose you are dealing with a 3-class classification problem and you want to train an SVM model on the data. For that you are using the One-vs-all method. How many times do we need to train our SVM model in such a case?

A) 1

B) 2

C) 3

D) 4

Answer: C) 3
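One-vs-all trains one binary model per class, each separating that class from all the others. The sketch below shows the training loop on made-up data; a simple perceptron (`train_perceptron`, a hypothetical helper) stands in for the SVM, since only the number of binary models matters here.

```python
def train_perceptron(X, y, epochs=50):
    """Binary linear classifier used as a simple stand-in for an SVM. Labels are +1/-1."""
    w = [0.0] * len(X[0])
    b = 0.0
    for _ in range(epochs):
        for xi, yi in zip(X, y):
            score = sum(wj * xj for wj, xj in zip(w, xi)) + b
            if yi * score <= 0:  # misclassified: nudge the boundary
                w = [wj + yi * xj for wj, xj in zip(w, xi)]
                b += yi
    return w, b

def train_one_vs_all(X, labels):
    """Train one binary model per class: that class (+1) versus all others (-1)."""
    models = {}
    for cls in sorted(set(labels)):
        binary = [1 if lab == cls else -1 for lab in labels]
        models[cls] = train_perceptron(X, binary)
    return models

# Hypothetical 3-class toy data.
X = [(0.0, 5.0), (1.0, 6.0), (5.0, 0.0), (6.0, 1.0), (-5.0, -5.0), (-6.0, -4.0)]
labels = ["a", "a", "b", "b", "c", "c"]

models = train_one_vs_all(X, labels)
print(len(models))  # one trained model per class
```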

Q7. What is/are true about kernels in SVM?

1. Kernel function can map low dimensional data to high dimensional space

2. It’s a similarity function

A) 1

B) 2

C) 1 and 2

D) None of these

Answer: C) 1 and 2
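Both statements can be checked on a small case. The sketch below, using a degree-2 polynomial kernel on 2-D inputs as an assumed example, shows that the kernel value equals an inner product (a similarity score) in a 3-D feature space, computed without ever building that space explicitly. The names `poly2_kernel` and `phi` are illustrative.

```python
import math

def poly2_kernel(x, z):
    """Degree-2 polynomial kernel K(x, z) = (x . z)^2 on 2-D inputs."""
    return (x[0] * z[0] + x[1] * z[1]) ** 2

def phi(x):
    """Explicit 3-D feature map whose inner product reproduces poly2_kernel."""
    return (x[0] ** 2, math.sqrt(2) * x[0] * x[1], x[1] ** 2)

x, z = (1.0, 2.0), (3.0, 4.0)
k_direct = poly2_kernel(x, z)
k_mapped = sum(a * b for a, b in zip(phi(x), phi(z)))
print(k_direct, k_mapped)  # the two values agree up to floating-point rounding
```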

Q8. If g(z) is the sigmoid function, then its derivative with respect to z may be written in terms of g(z) as

A) g(z)(g(z)-1)

B) g(z)(1+g(z))

C) -g(z)(1+g(z))

D) g(z)(1-g(z))

Answer: D) g(z)(1-g(z))
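The identity g'(z) = g(z)(1 - g(z)) can be checked numerically. A minimal sketch comparing it against a central finite-difference approximation at a few arbitrary points (the step size `h` and the sample points are assumptions):

```python
import math

def g(z):
    """Sigmoid function."""
    return 1.0 / (1.0 + math.exp(-z))

def g_prime_numeric(z, h=1e-6):
    """Central finite-difference approximation of dg/dz."""
    return (g(z + h) - g(z - h)) / (2 * h)

for z in (-2.0, 0.0, 0.5, 3.0):
    print(z, g_prime_numeric(z), g(z) * (1 - g(z)))
```

The two columns match closely at every point, which is consistent with option D.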

Q9. Below are the labelled instances of 2 classes and hand drawn decision boundaries for logistic regression. Which of the following figures demonstrates overfitting of the training data?

A) A

B) B

C) C

D) None of these

Answer: C) C

Q10. What do you conclude after seeing the visualization in the previous question (Question 9)?

C1. The training error in the first plot is higher as compared to the second and third plot.

C2. The best model for this regression problem is the last (third) plot because it has minimum training error (zero).

C3. Out of the 3 models, the second model is expected to perform best on unseen data.

C4. All will perform similarly because we have not seen the test data.

A) C1 and C2

B) C1 and C3

C) C2 and C3

D) C4

Answer: B) C1 and C3

More NPTEL Courses: https://progiez.com/nptel-assignment-answers