Introduction to Machine Learning Nptel Week 3 Answers

Are you looking for Introduction to Machine Learning Nptel Week 3 Answers? You’ve come to the right place! Access the latest and most accurate solutions for your Week 3 assignment in the Introduction to Machine Learning course.

Introduction to Machine Learning Nptel Week 3 Answers (July-Dec 2025)

Question 1. For a two-class problem using discriminant functions (δₖ – discriminant function for class k), where is the separating hyperplane located?

a) Where δ₁ > δ₂

b) Where δ₁ < δ₂

c) Where δ₁ = δ₂

d) Where δ₁ + δ₂ = 1

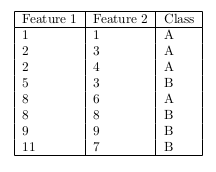

Question 2. Given the following dataset consisting of two classes, A and B, calculate the prior probability of each class. What are the prior probabilities of class A and class B?

a) P(A) = 0.5, P(B) = 0.5

b) P(A) = 0.625, P(B) = 0.375

c) P(A) = 0.375, P(B) = 0.625

d) P(A) = 0.6, P(B) = 0.4

Question 3. In a 3-class classification problem using linear regression, the output vectors for three data points are [0.8, 0.3, -0.1], [0.2, 0.6, 0.2], and [-0.1, 0.4, 0.7]. To which classes would these points be assigned?

a) 1, 2, 1

b) 1, 2, 2

c) 1, 3, 2

d) 1, 2, 3
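With linear regression on indicator targets, each point is assigned to the class whose output component is largest. The sketch below assumes 1-based class labels and applies that argmax rule to the three vectors in the question:

```python
import numpy as np

# Output vectors from the linear-regression classifier (one row per data point).
outputs = np.array([
    [0.8, 0.3, -0.1],
    [0.2, 0.6, 0.2],
    [-0.1, 0.4, 0.7],
])

# Each point goes to the class with the largest output; +1 converts to 1-based labels.
labels = np.argmax(outputs, axis=1) + 1
print(labels.tolist())  # [1, 2, 3]
```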

Question 4. If you have a 5-class classification problem and want to avoid masking using polynomial regression, what is the minimum degree of the polynomial you should use?

a) 3

b) 4

c) 5

d) 6

Question 5. Consider a logistic regression model where the predicted probability for a given data point is 0.4. If the actual label for this data point is 1, what is the contribution of this data point to the log-likelihood?

a) -1.3219

b) -0.9163

c) +1.3219

d) +0.9163
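A single point contributes y·log(p) + (1 − y)·log(1 − p) to the Bernoulli log-likelihood, using the natural log. A minimal sketch of that formula (the helper name is illustrative only):

```python
import math

def log_likelihood_contribution(p: float, y: int) -> float:
    """Bernoulli log-likelihood of label y in {0, 1} given predicted P(y = 1) = p."""
    return y * math.log(p) + (1 - y) * math.log(1 - p)

print(round(log_likelihood_contribution(0.4, 1), 4))  # -0.9163
```

For y = 1 this reduces to ln(0.4). The value −1.3219 is log₂(0.4), i.e. the same quantity in base 2.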

Question 6. What additional assumption does LDA make about the covariance matrix in comparison to the basic assumption of Gaussian class conditional density?

a) The covariance matrix is diagonal

b) The covariance matrix is identity

c) The covariance matrix is the same for all classes

d) The covariance matrix is different for each class

Question 7. What is the shape of the decision boundary in LDA?

a) Quadratic

b) Linear

c) Circular

d) Can not be determined

Question 8. For two classes C₁ and C₂ with within-class variances σ²w₁ = 1 and σ²w₂ = 4 respectively, if the projected means are µ′₁ = 1 and µ′₂ = 3, what is the Fisher criterion J(w)?

a) 0.5

b) 0.8

c) 1.25

d) 1.5
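Assuming the usual form of the Fisher criterion for projected 1-D data, J(w) = (µ′₁ − µ′₂)² / (σ²w₁ + σ²w₂), the arithmetic is:

```python
def fisher_criterion(mu1: float, mu2: float, var1: float, var2: float) -> float:
    """Fisher criterion J(w) = (mu1' - mu2')^2 / (s1^2 + s2^2) for projected 1-D data."""
    return (mu1 - mu2) ** 2 / (var1 + var2)

print(fisher_criterion(1, 3, 1, 4))  # 0.8
```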

Question 9. Given two classes C₁ and C₂ with means µ₁ = [2 3] and µ₂ = [5 7] respectively, what is the direction vector w for LDA when the within-class covariance matrix Sw is the identity matrix I?

a) [4 3]

b) [5 7]

c) [0.7 0.7]

d) [0.6 0.8]
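The LDA (Fisher) direction is proportional to Sw⁻¹(µ₂ − µ₁). With Sw = I this is just the mean difference [3 4]; since only the direction matters, it is usually reported as a unit vector (and −w is equally valid). A small sketch:

```python
import numpy as np

mu1 = np.array([2.0, 3.0])
mu2 = np.array([5.0, 7.0])
S_w = np.eye(2)  # within-class covariance given as the identity

# Fisher/LDA direction: w ∝ S_w^{-1} (mu2 - mu1); solve instead of forming the inverse.
w = np.linalg.solve(S_w, mu2 - mu1)   # [3, 4]
w_unit = w / np.linalg.norm(w)        # [0.6, 0.8]
print(w, w_unit)
```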

Introduction to Machine Learning Nptel Week 3 Answers (Jan-Apr 2025)

Course Link: Click Here

1) Which of the following statement(s) about decision boundaries and discriminant functions of classifiers is/are true?

a) In a binary classification problem, all points $x$ on the decision boundary satisfy $\delta_1(x) = \delta_2(x)$.

b) In a three-class classification problem, all points on the decision boundary satisfy $\delta_1(x) = \delta_2(x) = \delta_3(x)$.

c) In a three-class classification problem, all points on the decision boundary satisfy at least one of $\delta_1(x) = \delta_2(x)$, $\delta_2(x) = \delta_3(x)$, or $\delta_3(x) = \delta_1(x)$.

d) If $x$ does not lie on the decision boundary, then all points lying in a sufficiently small neighborhood around $x$ belong to the same class.

2) You train an LDA classifier on a dataset with 2 classes. The decision boundary is significantly different from the one obtained by logistic regression. What could be the reason?

a) The underlying data distribution is Gaussian.

b) The two classes have equal covariance matrices.

c) The underlying data distribution is not Gaussian.

d) The two classes have unequal covariance matrices.

3) The following table gives the binary ground truth labels $Y_i$ for four input points (not given). We have a logistic regression model with some parameter values that computes the probability $P_1(X_i)$ that the label is 1. Compute the likelihood of observing the data given these model parameters.

| $Y_i$ | 1 | 0 | 1 | 0 |
|---|---|---|---|---|
| $P_1(X_i)$ | 0.8 | 0.5 | 0.2 | 0.9 |

a) 0.072

b) 0.144

c) 0.288

d) 0.002

4) Which of the following statement(s) about logistic regression is/are true?

a) It learns a model for the probability distribution of the data points in each class.

b) The output of a linear model is transformed to the range (0,1) by a sigmoid function.

c) The parameters are learned by minimizing the mean-squared loss.

d) The parameters are learned by maximizing the log-likelihood.

5) Consider a modified form of logistic regression given below, where $k$ is a positive constant and $\beta_0$, $\beta_1$ are parameters:

6) Consider a Bayesian classifier for a 5-class classification problem. The following table gives the class-conditioned density $f_k(x)$ for class $k$ at some point $x$ in the input space.

| $k$ | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| $f_k(x)$ | 0.15 | 0.20 | 0.05 | 0.50 | 0.01 |

Let $\pi_k$ denote the prior probability of class $k$. Which of the following statement(s) about the predicted label at $x$ is/are true?

a) The predicted label at $x$ will always be class 4.

b) If $2\pi_i > \pi_{i+1}$ for all $i \in \{1, 2, 3, 4\}$, the predicted class must be class 4.

c) If $\pi_1 > \pi_2 > \pi_3 > \pi_4$, the predicted class must be class 1.

d) The predicted label at $x$ can never be class 5.
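A Bayes classifier predicts the class maximizing πₖ·fₖ(x), which is proportional to the posterior. The sketch below applies that rule to the densities in the table; the prior vectors are hypothetical, chosen only to show that the prediction depends on the priors:

```python
import numpy as np

# Class-conditional densities f_k(x) at the query point, classes 1..5.
f = np.array([0.15, 0.20, 0.05, 0.50, 0.01])

def predict(priors) -> int:
    """Bayes rule: pick the class k maximizing pi_k * f_k(x) (posterior up to a constant)."""
    scores = np.asarray(priors) * f
    return int(np.argmax(scores)) + 1  # 1-based class label

print(predict([0.2] * 5))                        # 4 (equal priors)
print(predict([0.60, 0.20, 0.10, 0.05, 0.05]))   # 1 (hypothetical skewed priors)
print(predict([0.01, 0.01, 0.01, 0.01, 0.96]))   # 5 (hypothetical, class 5 dominant)
```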

7) Which of the following statement(s) about a two-class LDA classification model is/are true?

a) On the decision boundary, the prior probabilities corresponding to both classes must be equal.

b) On the decision boundary, the posterior probabilities corresponding to both classes must be equal.

c) On the decision boundary, class-conditioned probability densities corresponding to both classes must be equal.

d) On the decision boundary, the class-conditioned probability densities corresponding to both classes may or may not be equal.

8) Consider the following two datasets and two LDA classifier models trained respectively on these datasets:

- Dataset A: 200 samples of class 0; 50 samples of class 1.

- Dataset B: 200 samples of class 0 (same as Dataset A); 100 samples of class 1 created by repeating twice the class 1 samples from Dataset A.

Let the classifier decision boundary learned be of the form $w \cdot x + b = 0$, where $w$ is the slope and $b$ is the intercept. Which of the given statements is true?

a) The learned decision boundary will be the same for both models.

b) The two models will have the same slope but different intercepts.

c) The two models will have different slopes but the same intercept.

d) The two models may have different slopes and different intercepts.

9) Which of the following statement(s) about LDA is/are true?

a) It minimizes the inter-class variance relative to the intra-class variance.

b) It maximizes the inter-class variance relative to the intra-class variance.

c) Maximizing the Fisher information results in the same direction of the separating hyperplane as the one obtained by equating the posterior probabilities of classes.

d) Maximizing the Fisher information results in a different direction of the separating hyperplane from the one obtained by equating the posterior probabilities of classes.

10) Which of the following statement(s) regarding logistic regression and LDA is/are true for a binary classification problem?

a) For any classification dataset, both algorithms learn the same decision boundary.

b) Adding a few outliers to the dataset is likely to cause a larger change in the decision boundary of LDA compared to that of logistic regression.

c) Adding a few outliers to the dataset is likely to cause a similar change in the decision boundaries of both classifiers.

d) If the intra-class distributions deviate significantly from the Gaussian distribution, logistic regression is likely to perform better than LDA.

Introduction to Machine Learning Nptel Week 3 Answers (July-Dec 2024)

Q1. For a two-class problem using discriminant functions (δₖ is the discriminant function for class k), where is the separating hyperplane located?

Where δ₁ > δ₂

Where δ₁ < δ₂

Where δ₁ = δ₂

Where δ₁ + δ₂ = 1

Answer: Where δ₁ = δ₂

Q2. Given the following dataset consisting of two classes, A and B, calculate the prior probability of each class.

What are the prior probabilities of class A and class B?

P(A)=0.5,P(B)=0.5

P(A)=0.625,P(B)=0.375

P(A)=0.375,P(B)=0.625

P(A)=0.6,P(B)=0.4

Answer: P(A)=0.625,P(B)=0.375

For answers or latest updates join our telegram channel: Click here to join

These are Introduction to Machine Learning Nptel Week 3 Answers

Q3. In a 3-class classification problem using linear regression, the output vectors for three data points are [0.8, 0.3, -0.1], [0.2, 0.6, 0.2], and [-0.1, 0.4, 0.7]. To which classes would these points be assigned?

1, 2, 1

1, 2, 2

1, 3, 2

1, 2, 3

Answer: 1, 2, 3

Q4. If you have a 5-class classification problem and want to avoid masking using polynomial regression, what is the minimum degree of the polynomial you should use?

3

4

5

6

Answer: 4

Q5. Consider a logistic regression model where the predicted probability for a given data point is 0.4. If the actual label for this data point is 1, what is the contribution of this data point to the log-likelihood?

-1.3219

-0.9163

+1.3219

+0.9163

Answer: -0.9163

Q6. What additional assumption does LDA make about the covariance matrix in comparison to the basic assumption of Gaussian class-conditional density?

The covariance matrix is diagonal

The covariance matrix is identity

The covariance matrix is the same for all classes

The covariance matrix is different for each class

Answer: The covariance matrix is the same for all classes

Q7. What is the shape of the decision boundary in LDA?

Quadratic

Linear

Circular

Can not be determined

Answer: Linear

Q8. For two classes C₁ and C₂ with within-class variances σ²w₁ = 1 and σ²w₂ = 4 respectively, if the projected means are µ′₁ = 1 and µ′₂ = 3, what is the Fisher criterion J(w)?

0.5

0.8

1.25

1.5

Answer: 0.8

Q9. Given two classes C₁ and C₂ with means µ₁ = [2 3] and µ₂ = [5 7] respectively, what is the direction vector w for LDA when the within-class covariance matrix Sw is the identity matrix I?

[4 3]

[5 7]

[0.7 0.7]

[0.6 0.8]

Answer: [0.6 0.8] (w ∝ Sw⁻¹(µ₂ − µ₁) = [3 4], normalized to unit length)

All weeks of Introduction to Machine Learning: Click Here

For answers to additional Nptel courses, please refer to this link: NPTEL Assignment Answers

Introduction to Machine Learning Nptel Week 3 Answers (Jan-Apr 2024)

Course name: Introduction to Machine Learning

Course Link: Click Here

Q1. Which of the following statement(s) about decision boundaries and discriminant functions of classifiers is/are true?

In a binary classification problem, all points x on the decision boundary satisfy δ1(x)=δ2(x)

In a three-class classification problem, all points on the decision boundary satisfy δ1(x) = δ2(x) = δ3(x)

In a three-class classification problem, all points on the decision boundary satisfy at least one of δ1(x) = δ2(x), δ2(x) = δ3(x) or δ3(x) = δ1(x).

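Let the input space be ℝⁿ. If x does not lie on the decision boundary, there exists an ε > 0 such that all inputs y satisfying ‖y − x‖ < ε belong to the same class.

Answer: a, c, d (in a three-class problem, a boundary point only requires two of the discriminants to tie, so b is false)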

Q2. The following table gives the binary ground truth labels yi for four input points xi (not given). We have a logistic regression model with some parameter values that computes the probability p(xi) that the label is 1. Compute the likelihood of observing the data given these model parameters.

0.346

0.230

0.058

0.086

Answer: 0.230

Q3. Which of the following statement(s) about logistic regression is/are true?

It learns a model for the probability distribution of the data points in each class.

The output of a linear model is transformed to the range (0, 1) by a sigmoid function.

The parameters are learned by optimizing the mean-squared loss.

The loss function is optimized by using an iterative numerical algorithm.

Answer: b, d

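Q4. Consider a modified form of logistic regression given below, where k is a positive constant and β0 and β1 are parameters.

$\log\left(\dfrac{1 - p(x)}{k\,p(x)}\right) = \beta_0 - \beta_1 x$

Then find p(x).

$\dfrac{e^{\beta_0}}{k e^{\beta_0} + e^{\beta_1 x}}$

$\dfrac{e^{\beta_1 x}}{e^{\beta_0} + k e^{\beta_1 x}}$

$\dfrac{e^{\beta_1 x}}{k e^{\beta_0} + e^{\beta_1 x}}$

$\dfrac{e^{\beta_1 x}}{k e^{\beta_0} + e^{-\beta_1 x}}$

Answer: c. $\dfrac{e^{\beta_1 x}}{k e^{\beta_0} + e^{\beta_1 x}}$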
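One way to sanity-check a closed form like this is to plug it back into the defining relation log((1 − p)/(k·p)) = β0 − β1·x. The sketch below does that numerically for option c; the parameter values are hypothetical and serve only the check:

```python
import math

def p_option_c(x: float, beta0: float, beta1: float, k: float) -> float:
    """Candidate answer: p(x) = e^{beta1 x} / (k e^{beta0} + e^{beta1 x})."""
    return math.exp(beta1 * x) / (k * math.exp(beta0) + math.exp(beta1 * x))

# Hypothetical parameter values, chosen only for the check.
x, beta0, beta1, k = 0.7, 0.3, -1.2, 2.5
p = p_option_c(x, beta0, beta1, k)
lhs = math.log((1 - p) / (k * p))
rhs = beta0 - beta1 * x
print(math.isclose(lhs, rhs))  # True
```

Algebraically, exponentiating gives (1 − p)/(k·p) = e^(β0 − β1·x), so p = 1/(1 + k·e^(β0 − β1·x)); multiplying numerator and denominator by e^(β1·x) yields option c.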

Q5. Consider a Bayesian classifier for a 3-class classification problem. The following tables give the class-conditioned density fk(x) for three classes k=1,2,3 at some point x in the input space.

Note that πk denotes the prior probability of class k. Which of the following statement(s) about the predicted label at x is/are true?

If the three classes have equal priors, the prediction must be class 2

If π3<π2 and π1<π2, the prediction may not necessarily be class 2

If π1>2π2, the prediction could be class 1 or class 3

If π1>π2>π3, the prediction must be class 1

Answer: a, c

Q6. The following table gives the binary labels $y^{(i)}$ for four points $(x_1^{(i)}, x_2^{(i)})$, where i = 1, 2, 3, 4. Among the given options, which set of parameter values β0, β1, β2 of a standard logistic regression model $p(x) = \dfrac{1}{1 + e^{-(\beta_0 + \beta_1 x_1 + \beta_2 x_2)}}$ results in the highest likelihood for this data?

β0=0.5,β1=1.0,β2=2.0

β0=−0.5,β1=−1.0,β2=2.0

β0=0.5,β1=1.0,β2=−2.0

β0=−0.5,β1=1.0,β2=2.0

Answer: c. β0=0.5,β1=1.0,β2=−2.0

Q7. Which of the following statement(s) about a two-class LDA model is/are true?

It is assumed that the class-conditioned probability density of each class is a Gaussian

A different covariance matrix is estimated for each class

At a given point on the decision boundary, the class-conditioned probability densities corresponding to both classes must be equal

At a given point on the decision boundary, the class-conditioned probability densities corresponding to both classes may or may not be equal

Answer: A, D

Q8. Consider the following two datasets and two LDA models trained respectively on these datasets.

Dataset A: 100 samples of class 0; 50 samples of class 1

Dataset B: 100 samples of class 0 (same as Dataset A); 100 samples of class 1 created by repeating twice the class 1 samples from Dataset A

The classifier is defined as follows in terms of the decision boundary $w^T x + b = 0$. Here, $w$ is called the slope and $b$ is called the intercept.

$\hat{y}(x) = \begin{cases} 0 & \text{if } w^T x + b < 0 \\ 1 & \text{if } w^T x + b \ge 0 \end{cases}$

Which of the given statements is true?

The learned decision boundary will be the same for both models

The two models will have the same slope but different intercepts

The two models will have different slopes but the same intercept

The two models may have different slopes and different intercepts

Answer: The two models will have the same slope but different intercepts

Q9. Which of the following statement(s) about LDA is/are true?

It minimizes the between-class variance relative to the within-class variance

It maximizes the between-class variance relative to the within-class variance

Maximizing the Fisher information results in the same direction of the separating hyperplane as the one obtained by equating the posterior probabilities of classes

Maximizing the Fisher information results in a different direction of the separating hyperplane from the one obtained by equating the posterior probabilities of classes

Answer: b, c

Q10. Which of the following statement(s) regarding logistic regression and LDA is/are true for a binary classification problem?

For any classification dataset, both algorithms learn the same decision boundary

Adding a few outliers to the dataset is likely to cause a larger change in the decision boundary of LDA compared to that of logistic regression

Adding a few outliers to the dataset is likely to cause a similar change in the decision boundaries of both classifiers

If the within-class distributions deviate significantly from the Gaussian distribution, logistic regression is likely to perform better than LDA

Answer: b, d

Introduction to Machine Learning Nptel Week 3 Answers (July-Dec 2023)

Course Name: Introduction to Machine Learning

Course Link: Click Here

Q1. Which of the following are differences between LDA and Logistic Regression?

Logistic Regression is typically suited for binary classification, whereas LDA is directly applicable to multi-class problems

Logistic Regression is robust to outliers whereas LDA is sensitive to outliers

both (a) and (b)

None of these

Answer: both (a) and (b)

Q2. We have two classes in our dataset. The two classes have the same mean but different variance.

LDA can classify them perfectly.

LDA can NOT classify them perfectly.

LDA is not applicable in data with these properties

Insufficient information

Answer: LDA can NOT classify them perfectly.

Q3. We have two classes in our dataset. The two classes have the same variance but different mean.

LDA can classify them perfectly.

LDA can NOT classify them perfectly.

LDA is not applicable in data with these properties

Insufficient information

Answer: Insufficient information

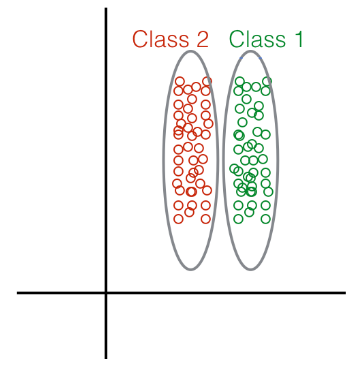

Q4. Given the following distribution of data points:

What method would you choose to perform Dimensionality Reduction?

Linear Discriminant Analysis

Principal Component Analysis

Both LDA and/or PCA.

None of the above.

Answer: Linear Discriminant Analysis

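Q5. If $\log\left(\dfrac{1 - p(x)}{1 + p(x)}\right) = \beta_0 + \beta x$,

What is p(x)?

a. $p(x) = \dfrac{1 + e^{\beta_0 + \beta x}}{e^{\beta_0 + \beta x}}$

b. $p(x) = \dfrac{1 + e^{\beta_0 + \beta x}}{1 - e^{\beta_0 + \beta x}}$

c. $p(x) = \dfrac{e^{\beta_0 + \beta x}}{1 + e^{\beta_0 + \beta x}}$

d. $p(x) = \dfrac{1 - e^{\beta_0 + \beta x}}{1 + e^{\beta_0 + \beta x}}$

Answer: d. $p(x) = \dfrac{1 - e^{\beta_0 + \beta x}}{1 + e^{\beta_0 + \beta x}}$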
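Writing z = β0 + βx and E = e^z, the relation (1 − p)/(1 + p) = E rearranges to p = (1 − E)/(1 + E). A minimal sketch that solves for p and confirms the round trip on a few hypothetical values of z:

```python
import math

def p_from_relation(z: float) -> float:
    """Solve log((1 - p) / (1 + p)) = z for p, where z = beta0 + beta * x."""
    e = math.exp(z)
    return (1 - e) / (1 + e)

for z in (-2.0, -0.5, 0.0, 1.5):   # hypothetical values of beta0 + beta * x
    p = p_from_relation(z)
    assert math.isclose(math.log((1 - p) / (1 + p)), z, abs_tol=1e-12)
```

Note that for z > 0 this gives p(x) < 0, so this modified form only yields a valid probability where β0 + βx ≤ 0.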

Q6. For the two classes '+' and '-' shown below, which line is the most appropriate for projecting the data points while performing LDA?

Red

Orange

Blue

Green

Answer: Blue

Q7. Which of these techniques do we use to optimise Logistic Regression:

Least Square Error

Maximum Likelihood

(a) or (b) are equally good

(a) and (b) perform very poorly, so we generally avoid using Logistic Regression

None of these

Answer: Maximum Likelihood

Q8. LDA assumes that the class data is distributed as:

Poisson

Uniform

Gaussian

LDA makes no such assumption.

Answer: Gaussian

Q9. Suppose we have two variables, X and Y (the dependent variable), and we wish to find their relation. An expert tells us that the relation between the two has the form $Y = m e^{X} + c$. Suppose samples of the variables X and Y are available to us. Is it possible to apply linear regression to this data to estimate the values of m and c?

No.

Yes.

Insufficient information.

None of the above.

Answer: Yes.
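The model is non-linear in X but linear in the unknowns m and c, so ordinary least squares works once the feature e^X is precomputed. A sketch on hypothetical noise-free data generated with m = 2 and c = 1:

```python
import numpy as np

# Hypothetical noise-free samples from Y = m * e^X + c with m = 2, c = 1.
X = np.linspace(-1.0, 2.0, 20)
Y = 2.0 * np.exp(X) + 1.0

# The model is linear in (m, c) once the feature e^X is precomputed.
A = np.column_stack([np.exp(X), np.ones_like(X)])
(m_hat, c_hat), *_ = np.linalg.lstsq(A, Y, rcond=None)
print(m_hat, c_hat)  # close to 2.0 and 1.0
```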

Q10. What might happen to our logistic regression model if the number of features is more than the number of samples in our dataset?

It will remain unaffected

It will not find a hyperplane as the decision boundary

It will overfit

None of the above

Answer: It will overfit

Introduction to Machine Learning Nptel Week 3 Answers (Jan-Apr 2023)

Course Name: Introduction to Machine Learning

Course Link: Click Here

Q1. Which of the following is false about a logistic regression based classifier?

a. The logistic function is non-linear in the weights

b. The logistic function is linear in the weights

c. The decision boundary is non-linear in the weights

d. The decision boundary is linear in the weights

Answer: b, c

Q2. Consider the case where two classes follow Gaussian distributions centered at (3, 9) and (−3, 3) with identity covariance matrices. Which of the following is the separating decision boundary using LDA, assuming equal priors?

a. y−x=3

b. x+y=3

c. x+y=6

d. both (b) and (c)

e. None of the above

f. Can not be found from the given information

Answer: c. x+y=6
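For LDA with shared covariance Σ, setting δ₁(x) = δ₂(x) gives the boundary wᵀx + b = 0 with w = Σ⁻¹(µ₁ − µ₂) and b = −½(µ₁ + µ₂)ᵀΣ⁻¹(µ₁ − µ₂) + log(π₁/π₂). A sketch applying these standard formulas to the given centers:

```python
import numpy as np

mu1 = np.array([3.0, 9.0])
mu2 = np.array([-3.0, 3.0])
Sigma = np.eye(2)          # shared (identity) covariance
pi1 = pi2 = 0.5            # equal priors

# LDA boundary: w^T x + b = 0 with
#   w = Sigma^{-1} (mu1 - mu2)
#   b = -0.5 (mu1 + mu2)^T Sigma^{-1} (mu1 - mu2) + log(pi1 / pi2)
w = np.linalg.solve(Sigma, mu1 - mu2)            # [6, 6]
b = -0.5 * (mu1 + mu2) @ w + np.log(pi1 / pi2)   # -36
print(w, b)   # 6x + 6y - 36 = 0, i.e. x + y = 6
```

With identity covariance and equal priors this is simply the perpendicular bisector of the segment joining the two means, which passes through the midpoint (0, 6).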

Q3. Consider the following relation between a dependent variable and an independent variable identified by doing simple linear regression. Which among the following relations between the two variables does the graph indicate?

a. as the independent variable increases, so does the dependent variable

b. as the independent variable increases, the dependent variable decreases

c. if an increase in the value of the dependent variable is observed, then the independent variable will show a corresponding increase

d. if an increase in the value of the dependent variable is observed, then the independent variable will show a corresponding decrease

e. the dependent variable in this graph does not actually depend on the independent variable

f. none of the above

Answer: e. the dependent variable in this graph does not actually depend on the independent variable

Q4. Given the following distribution of data points:

What method would you choose to perform Dimensionality Reduction?

a. Linear Discriminant Analysis

b. Principal Component Analysis

Answer: a. Linear Discriminant Analysis

Q5. In general, which of the following classification methods is the most resistant to gross outliers?

a. Quadratic Discriminant Analysis (QDA)

b. Linear Regression

c. Logistic regression

d. Linear Discriminant Analysis (LDA)

Answer: c. Logistic regression

Q6. Suppose that we have two variables, X and Y (the dependent variable). We wish to find the relation between them. An expert tells us that the relation between the two has the form $Y = m + X^2 + c$. Available to us are samples of the variables X and Y. Is it possible to apply linear regression to this data to estimate the values of m and c?

a. no

b. yes

c. insufficient information

Answer: a. no
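The reason is identifiability: Y − X² = m + c, so the columns multiplying m and c in the least-squares design matrix are identical and only their sum can be estimated. A sketch on hypothetical X values showing the rank deficiency:

```python
import numpy as np

# Hypothetical sample of X values.
X = np.array([-2.0, -1.0, 0.0, 1.0, 3.0])

# Y - X^2 = m + c, so the columns multiplying m and c are both constant 1s.
A = np.column_stack([np.ones_like(X), np.ones_like(X)])
print(np.linalg.matrix_rank(A))  # 1: only the sum m + c is identifiable
```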

Q7. In a binary classification scenario where x is the independent variable and y is the dependent variable, logistic regression assumes that the conditional distribution y|x follows a

a. Bernoulli distribution

b. binomial distribution

c. normal distribution

d. exponential distribution

Answer: a. Bernoulli distribution

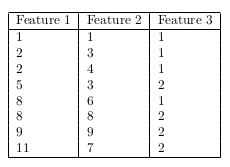

Q8. Consider the following data:

Assuming that you apply LDA to this data, what is the estimated covariance matrix?

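a. $\begin{bmatrix} 1.875 & 0.3125 \\ 0.3125 & 0.9375 \end{bmatrix}$

b. $\begin{bmatrix} 2.5 & 0.4167 \\ 0.4167 & 1.25 \end{bmatrix}$

c. $\begin{bmatrix} 1.875 & 0.3125 \\ 0.3125 & 1.2188 \end{bmatrix}$

d. $\begin{bmatrix} 2.5 & 0.4167 \\ 0.4167 & 1.625 \end{bmatrix}$

e. $\begin{bmatrix} 3.25 & 1.1667 \\ 1.1667 & 2.375 \end{bmatrix}$

f. $\begin{bmatrix} 2.4375 & 0.875 \\ 0.875 & 1.7812 \end{bmatrix}$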

g. None of these

Answer: g. None of these

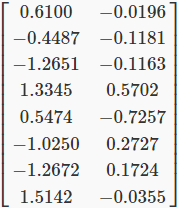

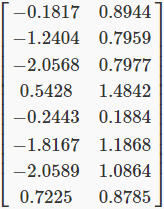

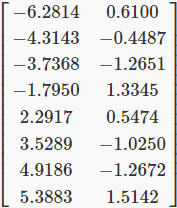

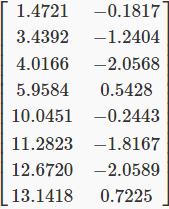

Q9. Given the following 3D input data, identify the principal component.

(Steps: center the data, calculate the sample covariance matrix, calculate the eigenvectors and eigenvalues, identify the principal component)

a. [−0.1022 0.0018 0.9948]

b. [0.5742 −0.8164 0.0605]

c. [0.5742 0.8164 0.0605]

d. [−0.5742 0.8164 0.0605]

e. [0.8123 0.5774 0.0824]

f. None of the above

Answer: e. [0.8123 0.5774 0.0824]
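The four steps listed in the question can be sketched with NumPy as below. The 3-D points are hypothetical, since the assignment's data table is only available as an image; the function name is illustrative:

```python
import numpy as np

def principal_component(data: np.ndarray) -> np.ndarray:
    """Return the unit eigenvector of the sample covariance with the largest eigenvalue."""
    centered = data - data.mean(axis=0)              # 1. center the data
    cov = np.cov(centered, rowvar=False)             # 2. sample covariance (divides by n - 1)
    eigvals, eigvecs = np.linalg.eigh(cov)           # 3. eigen-decomposition (ascending order)
    return eigvecs[:, np.argmax(eigvals)]            # 4. eigenvector of the largest eigenvalue

# Hypothetical 3-D points (not the assignment's data).
data = np.array([
    [2.0, 1.0, 0.5],
    [4.0, 3.0, 0.4],
    [6.0, 4.0, 0.6],
    [8.0, 6.5, 0.5],
    [3.0, 2.0, 0.7],
])
pc = principal_component(data)
print(pc)  # sign is arbitrary: -pc is an equally valid answer
```

Because eigenvectors are only defined up to sign, an option that differs from the computed vector by an overall factor of −1 describes the same principal component.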

Q10. For the data given in the previous question, find the transformed input along the first two principal components.

a.

b.

c.

d.

e. None of the above

Answer: c

Introduction to Machine Learning Nptel Week 3 Answers (Jul-Dec 2022)

Course Name: INTRODUCTION TO MACHINE LEARNING

Link to Enroll: Click Here

Q1. For linear classification we use:

a. A linear function to separate the classes.

b. A linear function to model the data.

c. A linear loss.

d. Non-linear function to fit the data.

Answer: a. A linear function to separate the classes.

Q2. Logit transformation of Pr(X = 1) for the given data S = [0, 1, 1, 0, 1, 0, 1]:

a. 3/4

b. 4/3

c. 4/7

d. 3/7

Answer: b. 4/3
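The logit is the log-odds, log(p/(1 − p)). For S, Pr(X = 1) = 4/7, so the odds inside the log are 4/3; 4/7 itself is the raw probability, not its logit. A quick sketch of each step:

```python
import math

S = [0, 1, 1, 0, 1, 0, 1]
p = sum(S) / len(S)        # Pr(X = 1) = 4/7
odds = p / (1 - p)         # 4/3
logit = math.log(odds)     # log(4/3) ≈ 0.2877
print(p, odds, logit)
```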

Q3. The output of binary class logistic regression lies in this range.

a. [−∞,∞]

b. [−1,1]

c. [0,1]

d. [−∞,0]

Answer: c. [0,1]

Q4. If $\log\left(\dfrac{1 - p(x)}{1 + p(x)}\right) = \beta_0 + \beta x$, what is $p(x)$?

Answer: d.

Q5. Logistic regression is robust to outliers. Why?

a. The squashing of output values between [0, 1] dampens the effect of outliers.

b. Linear models are robust to outliers.

c. The parameters in logistic regression tend to take small values due to the nature of the problem setting and hence outliers get translated to the same range as other samples.

d. The given statement is false.

Answer: a. The squashing of output values between [0, 1] dampens the effect of outliers.

Q6. The aim of LDA is (multiple options may apply):

a. Minimize intra-class variability.

b. Maximize intra-class variability.

c. Minimize the distance between the mean of classes

d. Maximize the distance between the mean of classes

Answer: a, d (minimize intra-class variability and maximize the distance between the class means)

Q7. We have two classes in our dataset with means 0 and 1, and variances 2 and 3.

a. LDA may be able to classify them perfectly.

b. LDA will definitely be able to classify them perfectly.

c. LDA will definitely NOT be able to classify them perfectly.

d. None of the above.

Answer: a. LDA may be able to classify them perfectly.

Q8. We have two classes in our dataset with means 0 and 5, and variances 1 and 2.

a. LDA may be able to classify them perfectly.

b. LDA will definitely be able to classify them perfectly.

c. LDA will definitely NOT be able to classify them perfectly.

d. None of the above.

Answer: b. LDA will definitely be able to classify them perfectly.

Q9. For the two classes '+' and '-' shown below, which line is the most appropriate for projecting the data points while performing LDA?

a. Red

b. Orange

c. Blue

d. Green

Answer: b. Orange

Q10. LDA assumes that the class data is distributed as:

a. Poisson

b. Uniform

c. Gaussian

d. LDA makes no such assumption.

Answer: c. Gaussian
