An Introduction to Artificial Intelligence | Week 12

Session: JAN-APR 2024

Course name: An Introduction to Artificial Intelligence

These are An Introduction to Artificial Intelligence Answers Week 12

Q1. Choose the CORRECT statement(s):

a. According to the Universality Theorem, we need at least 2 hidden layers to represent any continuous function to arbitrary closeness.

b. With large training datasets, SGD converges faster compared to Batch Gradient Descent.

c. tanh is simply a scaled linear transformation of the sigmoid function.

d. A 2-layer perceptron with a step activation function can represent XOR.

Answer: b, c

For Questions 2 to 4:

Consider a simple output function y = σ(w1 x1 + w2 x2 + b), where σ is the sigmoid function and x1, x2 are the inputs. We wish to learn the weights w1, w2, and the bias b. The loss function is defined as L = (ŷ - y)³, where ŷ is the true label (0 or 1). Take x1 = 1.75, x2 = 2.5, y = 0.8, and ŷ = 1.

Q2. What is the value of ∂L/∂y?

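Answer: -0.12 (∂L/∂y = -3(ŷ - y)² = -3 × 0.2²; the minus sign comes from differentiating ŷ - y with respect to y)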

Q3. What is the value of ∂L/∂w1 ?

Answer: -0.0336 (∂L/∂w1 = ∂L/∂y · y(1 - y) · x1 = -0.12 × 0.16 × 1.75)
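The chain rule behind Questions 2 and 3 can be checked numerically. The following is a minimal sketch using only the values stated in the problem; the variable names (`y_true`, `dL_dy`, and so on) are illustrative choices, not notation from the course. Note the minus sign that appears when (ŷ - y)³ is differentiated with respect to y.

```python
# Values stated in the problem
x1, x2 = 1.75, 2.5
y = 0.8        # network output, sigma(w1*x1 + w2*x2 + b)
y_true = 1.0   # the label, written as ŷ in the text

# L = (y_true - y)^3, so dL/dy = -3 * (y_true - y)^2
dL_dy = -3.0 * (y_true - y) ** 2

# Sigmoid derivative expressed through its output: dy/dz = y * (1 - y)
dy_dz = y * (1.0 - y)

# z = w1*x1 + w2*x2 + b, so dz/dw1 = x1
dL_dw1 = dL_dy * dy_dz * x1

print(round(dL_dy, 4), round(dL_dw1, 4))
```

The weights themselves are not needed here, because the sigmoid derivative depends only on the output y.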

Q4. Now, suppose that we have a 2 x 3 matrix M, and we use 2×2 average pooling with stride 1 to get x = [x1, x2]. What is ∂L/∂M12 ? (M12 is the element of M in the 1st row and 2nd column). Assume w1 = 0.5 and w2 = 1.

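Answer: -0.0072 (M12 lies in both pooling windows with weight 1/4 each, so ∂L/∂M12 = ∂L/∂y · y(1 - y) · (w1 + w2)/4 = -0.12 × 0.16 × 0.375)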
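The pooling gradient in Question 4 can be sketched the same way. This assumes the usual reading of the setup: with a 2×2 window and stride 1 over a 2×3 matrix, x1 averages columns 1 and 2 and x2 averages columns 2 and 3, so M12 contributes 1/4 to each. The variable names are illustrative.

```python
# Upstream quantities from the problem setup
y, y_true = 0.8, 1.0
w1, w2 = 0.5, 1.0

# dL/dz = dL/dy * dy/dz, with L = (y_true - y)^3 and y = sigmoid(z)
dL_dz = -3.0 * (y_true - y) ** 2 * y * (1.0 - y)

# z = w1*x1 + w2*x2 + b, so dL/dx_i = dL/dz * w_i
dL_dx = [dL_dz * w1, dL_dz * w2]

# M12 sits in column 2, which belongs to both pooling windows,
# and each window averages four entries (weight 1/4).
dx_dM12 = [0.25, 0.25]

dL_dM12 = sum(g * d for g, d in zip(dL_dx, dx_dM12))
print(round(dL_dM12, 4))
```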

Q5. Suppose we use a neural network to classify images of handwritten digits (0-9). Which of the following activation functions is most suitable at the output layer?

a. Softmax

b. ReLU

c. Tanh

d. Sigmoid

Answer: Softmax
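Softmax fits a ten-class problem because it turns ten raw scores into a probability distribution over the digits. Below is a minimal sketch in plain Python; the `logits` values are made up for illustration and do not come from any trained network.

```python
import math

def softmax(logits):
    """Map a list of real-valued scores to probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical output-layer scores for digits 0-9
logits = [0.1, 2.0, -1.0, 0.5, 0.0, 1.2, -0.3, 0.7, 0.2, -2.0]
probs = softmax(logits)

# The predicted digit is the index with the largest probability
predicted_digit = max(range(10), key=lambda k: probs[k])
print(predicted_digit, round(sum(probs), 6))
```

ReLU, tanh, and sigmoid act on each output independently, so their outputs do not form a single distribution over the ten classes.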

Q6. Consider the following perceptron unit which takes two boolean (0/1) variables x and y as input and computes the boolean variable z according to the following function. Here w1 is the weight corresponding to x, w2 is the weight corresponding to y, and T is the threshold of the perceptron.

Which of the following statement(s) is/are TRUE?

a. If w1 = 1, w2 = 1 and T = 1.5, then z = x ∧ y

b. If w1 = 1, w2 = 1 and T = 0.5, then z = x ∨ y

c. If w1 = 0, w2 = -1 and T = 0.5, then z = ¬y

d. If w1 = -1, w2 = 1 and T = 0.5, then z = x → y

Answer: a, b, c

Q7. By what factor does the size of the input decrease (i.e., what is the value of hbd / h'b'd', where the input is of size h × b × d and the output is of size h' × b' × d') after passing through a 3×3 MaxPool layer with stride 3? Size of the input is defined as height × breadth × depth.

(Round your answer off to the closest integer)

Answer: 9
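The factor of 9 follows from the standard output-size formula for pooling without padding, floor((n - k) / s) + 1, applied to height and breadth while depth is unchanged. A small sketch follows; the 27 × 27 × 16 input is a hypothetical example chosen so the window tiles the input exactly.

```python
def pool_out(n, k=3, s=3):
    """Output length along one spatial axis for a pooling window k with stride s (no padding)."""
    return (n - k) // s + 1

# Hypothetical input size: height, breadth, depth
h, b, d = 27, 27, 16

# Pooling shrinks height and breadth; depth passes through unchanged
h2, b2, d2 = pool_out(h), pool_out(b), d

ratio = (h * b * d) / (h2 * b2 * d2)
print(h2, b2, d2, ratio)
```

When the spatial dimensions are not multiples of 3 the ratio is only approximately 9, which is why the question asks for the closest integer.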

Q8. Which of the following statement(s) is(are) TRUE about DQNs for Atari games?

a. They learn to play the games by watching videos of expert human players.

b. The DQN only has access to the video frames on the game screen and the reward function. It cannot directly observe the mechanics of the game simulator.

c. They learn to play the games by random exploration.

d. The DQN only considers one video frame of the game at a time when playing the game.

Answer: b, c

Q9. Which of the following is/are TRUE regarding Neural Networks?

a. Deep Neural Networks do not require human experts to do feature engineering and can learn features on their own using training data.

b. Deep Neural Networks work well in settings where training data is scarce.

c. For the same number of parameters, in practice, neural networks that are tall + thin do better than networks that are fat + short.

d. There is a certain class of continuous functions that can only be computed by tall + thin neural networks and not fat + short ones, no matter how many neurons are available.

Answer: a, c

Q10. Which of the following is/are TRUE?

a. Deep Neural Networks can learn to enhance the bias present in their training data.

b. Deep Neural Networks are inspired by human brains and hence learn human-interpretable functions.

c. Deep Neural Networks are susceptible to adversarial attacks.

d. Deep Neural Networks are robust to all kinds of noise in input instances.

Answer: a, c

More Nptel Courses: https://progiez.com/nptel-assignment-answers

Course Name: An Introduction to Artificial Intelligence

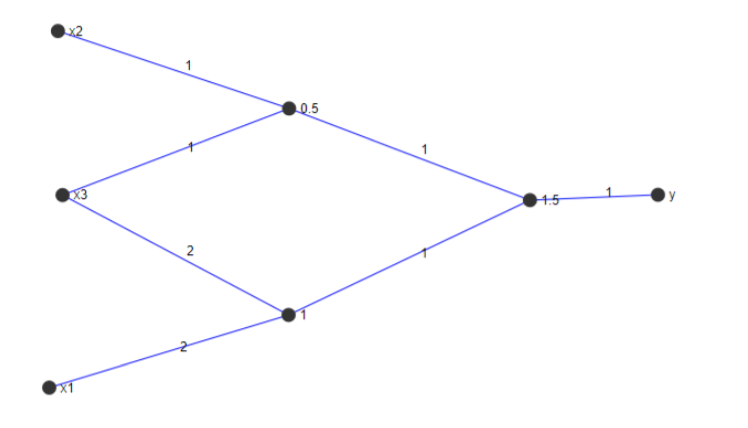

Q1. Consider the following perceptron network. Number on edge represents weight of corresponding input and number beside node represents threshold for that node. x1, x2 and x3 are Boolean variables. What is the representation of y in terms of the inputs?

a. (x1 ∨ x2 ∨ x3)

b. (x1 → ¬x2) → x3

c. (x1 → x2) → x3

d. (x1 ∧ x2 ∧ x3)

Answer: b. (x1 → ¬x2) → x3

Q2. Which of the following are correct regarding the various non-linear activation functions?

a. For sigmoid activation, strongest signal will flow to the weights of a neuron when the activation is near 0 or 1.

b. Tanh activation is a scaled transformation of the sigmoid activation.

c. ReLU activation can output a larger range of values than sigmoid activation

d. ReLU activation can accelerate the convergence of Stochastic Gradient Descent

Answer: b, c, d
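Option b rests on the identity tanh(z) = 2σ(2z) - 1, and option a fails because the sigmoid gradient σ(z)(1 - σ(z)) is largest at an activation of 0.5 and vanishes near 0 or 1. Both can be checked with a short sketch; the sample points are arbitrary.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# tanh as a scaled and shifted sigmoid: tanh(z) = 2*sigmoid(2z) - 1
samples = [-3.0, -1.0, 0.0, 0.5, 2.0]
max_gap = max(abs(math.tanh(z) - (2.0 * sigmoid(2.0 * z) - 1.0)) for z in samples)

# Sigmoid gradient at a mid-range activation versus a saturated one
grad_mid = sigmoid(0.0) * (1.0 - sigmoid(0.0))   # activation 0.5
grad_sat = sigmoid(6.0) * (1.0 - sigmoid(6.0))   # activation close to 1

print(max_gap, grad_mid, grad_sat)
```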

Q3. Assume that an input image of size 64 x 64 is passed through a convolution filter of size 6 x 6 and stride 2 followed by a max pooling filter of size 2 x 2 and stride 2. If the size of the resulting image is d x d, what is the value of d?

Answer: 15
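The value 15 comes from applying the output-size formula floor((n + 2p - k) / s) + 1 twice. The sketch below assumes no padding (p = 0), which the question does not state explicitly; `out_size` is an illustrative helper name.

```python
def out_size(n, k, s, p=0):
    """Spatial output size for a filter of size k, stride s, and padding p."""
    return (n + 2 * p - k) // s + 1

after_conv = out_size(64, k=6, s=2)         # 6x6 convolution, stride 2
d = out_size(after_conv, k=2, s=2)          # 2x2 max pooling, stride 2

print(after_conv, d)
```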

Q4. Which of the following statements are correct?

a. As long as a neural network has at least one hidden layer and sufficient number of parameters, it can represent any continuous function to arbitrary accuracy.

b. If we are given a fixed number of parameters, the optimal network in terms of learnability will have one neuron per hidden layer and |number of parameters| hidden layers.

c. Fat and short networks make better use of the compositionality of the classification task as compared to tall and thin networks.

d. Fat and short networks may struggle more when some of the desired output classes are under-represented in the training data.

Answer: a, d

Q5. Which of the following are correct regarding some of the issues faced by AI systems?

a. The robustness issue discussed in class characterises the tendency of the system to give completely incorrect outputs when tiny adversarial perturbations are applied to the weights of the network

b. The transparency issue discussed in class characterises the opaqueness of the system regarding why a particular network configuration fails or succeeds at a particular task

c. The bias issue discussed in class characterises the tendency of the system to amplify racial or gender bias present in the training data

d. The privacy issue discussed in class characterises the ability of a system to infer private information from the training data provided to it.

Answer: b, c, d

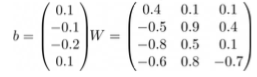

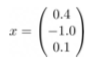

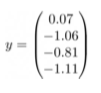

Q6. Consider one layer of a neural network with the weight and bias matrix as

Suppose the activation function used is ReLU. What will be the output for the input x?

a.

b.

c.

d.

Answer: b

Q7. Which of the following are correct for CNNs, the popular deep learning architectures for image recognition?

a. Convolutional neural networks consist of hand-designed convolutional filters along with fully connected neural networks layers.

b. The output of CNNs is invariant to minor translational shifts in input.

c. The first major success of deep learning in computer vision was based upon the performance of CNNs on the ImageNet challenge.

d. The layer before the softmax/sigmoid in CNNs for image recognition is usually a fully connected neural network layer.

Answer: b, c, d

Q8. Which of the following is the reason for employing replay buffers in the training of deep Q networks?

a. Sparsity in rewards during the episode.

b. Violation of the i.i.d assumption between consecutive samples of an episode.

c. Extremely large state space.

d. Extremely large action space.

Answer: b. Violation of the i.i.d assumption between consecutive samples of an episode.

Q9. If g(z) is the sigmoid function, which of the following is the correct expression for its derivative with respect to z?

a. g(z)

b. 1-g(z)

c. g(z) * (1 – g(z))

d. g(z) / (1 – g(z))

Answer: c. g(z) * (1 – g(z))
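The identity g'(z) = g(z)(1 - g(z)) can be sanity-checked against a central finite difference. This is a minimal sketch; the test points and the step size `eps` are arbitrary choices.

```python
import math

def g(z):
    """Sigmoid function."""
    return 1.0 / (1.0 + math.exp(-z))

def g_prime(z):
    """Closed-form derivative of the sigmoid."""
    return g(z) * (1.0 - g(z))

eps = 1e-6
points = [-2.0, -0.5, 0.0, 1.0, 3.0]

# Largest disagreement between the closed form and the numerical slope
max_err = max(
    abs(g_prime(z) - (g(z + eps) - g(z - eps)) / (2.0 * eps))
    for z in points
)
print(max_err)
```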

Q10. Consider a neural network that performs the following operations:

z = Wx + b

y = tanh(z)

J = Σi yi²

where i ranges over the training examples. For this problem, assume that there is only one training example.

Which of the following represents the derivative ∂J/∂b?

a. y^2

b. 2*(1-tanh^2(z))

c. 2tanh(z)*(1-tanh^2(z))

d. tanh(z)*(1-tanh^2(z))

Answer: c. 2tanh(z)*(1-tanh^2(z))
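With a single training example, J = tanh(z)², and the chain rule gives ∂J/∂b = 2·tanh(z)·(1 - tanh²(z)) because ∂z/∂b = 1. The sketch below compares that expression with a finite-difference estimate in the scalar case; the values of `W`, `x`, and `b` are hypothetical.

```python
import math

# Hypothetical scalar parameters and input
W, x, b = 0.7, 1.5, -0.2

def J(b_val):
    """Loss for one example: J = tanh(W*x + b)^2."""
    return math.tanh(W * x + b_val) ** 2

z = W * x + b

# Chain rule: dJ/dy = 2y, dy/dz = 1 - tanh(z)^2, dz/db = 1
analytic = 2.0 * math.tanh(z) * (1.0 - math.tanh(z) ** 2)

# Central finite difference as an independent check
eps = 1e-6
numeric = (J(b + eps) - J(b - eps)) / (2.0 * eps)

print(analytic, numeric)
```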
