Deep Learning IIT Ropar Week 8 Nptel Assignment Answers

Are you looking for the Deep Learning IIT Ropar Week 8 NPTEL Assignment Answers 2025 (Jan-Apr)? You’ve come to the right place! Access the most accurate and up-to-date solutions for your Week 8 assignment in the Deep Learning course offered by IIT Ropar.

Deep Learning IIT Ropar Week 8 Nptel Assignment Answers (Jan-Apr 2025)

1. What are the challenges associated with using the Tanh(x) activation function?

a. It is not zero centered

b. Computationally expensive

c. Non-differentiable at 0

d. Saturation

2. Which of the following problems makes training a neural network harder while using sigmoid as the activation function?

a. Not-continuous at 0

b. Not-differentiable at 0

c. Saturation

d. Computationally expensive

3. Consider the Exponential ReLU (ELU) activation function, defined as:

$$f(x) = \begin{cases} x, & x > 0 \\ a(e^x - 1), & x \leq 0 \end{cases}$$

where $a \neq 0$. Which of the following statements is true?

a. The function is discontinuous at x=0

b. The function is non-differentiable at x=0

c. Exponential ReLU can produce negative values

d. Exponential ReLU is computationally less expensive than ReLU
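
The options above can be explored numerically. The following is a minimal Python sketch of ELU as defined in the question; the function name `elu` and the default `a = 1.0` are assumptions chosen only for illustration.

```python
import math

def elu(x, a=1.0):
    """ELU as defined above: x for x > 0, a * (e^x - 1) for x <= 0."""
    return x if x > 0 else a * (math.exp(x) - 1.0)

# For a > 0 the x <= 0 branch yields values in (-a, 0].
# Both branches give 0 at x = 0, and the negative branch needs an exp() call.
for x in (-3.0, -1.0, 0.0, 2.0):
    print(x, elu(x))
```

Note that the left and right slopes at $x = 0$ are $a$ and 1 respectively, so they match only when $a = 1$.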

4. We have observed that the sigmoid neuron has become saturated. What might be the possible output values at this neuron?

a. 0.0666

b. 0.589

c. 0.9734

d. 0.498

e. 1

5. What is the gradient of the sigmoid function at saturation?

6. Which of the following are common issues caused by saturating neurons in deep networks?

a. Vanishing gradients

b. Slow convergence during training

c. Overfitting

d. Increased model complexity

7. Given a neuron initialized with weights $w_1 = 0.9$, $w_2 = 1.7$, and inputs $x_1 = 0.4$, $x_2 = -0.7$, calculate the output of a ReLU neuron.
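
The pre-activation is the weighted sum of the inputs. Assuming a bias of zero (the question does not give one), the computation works out as:

$$z = w_1 x_1 + w_2 x_2 = (0.9)(0.4) + (1.7)(-0.7) = 0.36 - 1.19 = -0.83, \qquad \mathrm{ReLU}(z) = \max(0, z) = 0$$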

8. Which of the following is incorrect with respect to the batch normalization process in neural networks?

a. We normalize the output produced at each layer before feeding it into the next layer

b. Batch normalization leads to a better initialization of weights

c. Backpropagation can be used after batch normalization

d. Variance and mean are not learnable parameters

9. Which of the following is an advantage of unsupervised pre-training in deep learning?

a. It helps in reducing overfitting

b. Pre-trained models converge faster

c. It requires fewer computational resources

d. It improves the accuracy of the model

10. How can you tell if your network is suffering from the Dead ReLU problem?

a. The loss function is not decreasing during training

b. A large number of neurons have zero output

c. The accuracy of the network is not improving

d. The network is overfitting to the training data

Deep Learning IIT Ropar Week 8 Nptel Assignment Answers (July-Dec 2024)

- Which of the following activation functions is not zero-centered?

A) Sigmoid

B) Tanh

C) ReLU

D) Softmax

Answer: C) ReLU

- What is the gradient of the sigmoid function at saturation?

Answer: 0
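
To see why the gradient vanishes, recall that the derivative of the sigmoid is $\sigma(x)(1 - \sigma(x))$. The sketch below evaluates it at a few points; the helper names `sigmoid` and `sigmoid_grad` are illustrative, not taken from the course material.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    """Derivative of the sigmoid: s * (1 - s), where s = sigmoid(x)."""
    s = sigmoid(x)
    return s * (1.0 - s)

# The gradient peaks at x = 0 and shrinks toward 0 as |x| grows (saturation).
for x in (0.0, 5.0, 10.0, -10.0):
    print(x, sigmoid(x), sigmoid_grad(x))
```

Strictly speaking the gradient approaches 0 rather than reaching it, which is why saturated outputs sit very close to 0 or 1.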

- Given a neuron initialized with weights w1=1.5, w2=0.5, and inputs x1=0.2, x2=−0.5, calculate the output of a ReLU neuron.

Answer: 0.05
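
The value 0.05 can be reproduced with a few lines of Python. This is a minimal sketch that assumes a bias of zero, since the question does not mention one; `relu_neuron` is a hypothetical helper name.

```python
def relu(z):
    return max(0.0, z)

def relu_neuron(weights, inputs, bias=0.0):
    """Weighted sum of the inputs plus bias, passed through ReLU."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return relu(z)

# 1.5 * 0.2 + 0.5 * (-0.5) = 0.30 - 0.25 = 0.05 (up to floating-point rounding)
out = relu_neuron([1.5, 0.5], [0.2, -0.5])
print(round(out, 4))
```

If the weighted sum were negative, the same function would return 0.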

- How does pre-training prevent overfitting in deep networks?

A) It adds regularization

B) It initializes the weights near local minima

C) It constrains the weights to a certain region

D) It eliminates the need for fine-tuning

Answer: C) It constrains the weights to a certain region

- We train a feed-forward neural network and notice that all the weights for a particular neuron are equal. What could be the possible causes of this issue?

A) Weights were initialized randomly

B) Weights were initialized to high values

C) Weights were initialized to equal values

D) Weights were initialized to zero

Answer: C) Weights were initialized to equal values

D) Weights were initialized to zero

- Which of the following best describes the concept of saturation in deep learning?

A) When the activation function output approaches either 0 or 1 and the gradient is close to zero.

B) When the activation function output is very small and the gradient is close to zero.

C) When the activation function output is very large and the gradient is close to zero.

D) None of the above.

Answer: A) When the activation function output approaches either 0 or 1 and the gradient is close to zero.

These are Deep Learning IIT Ropar Week 8 Nptel Assignment Answers

- Which of the following is true about the role of unsupervised pre-training in deep learning?

A) It is used to replace the need for labeled data

B) It is used to initialize the weights of a deep neural network

C) It is used to fine-tune a pre-trained model

D) It is only useful for small datasets

Answer: B) It is used to initialize the weights of a deep neural network

- Which of the following is an advantage of unsupervised pre-training in deep learning?

A) It helps in reducing overfitting

B) Pre-trained models converge faster

C) It improves the accuracy of the model

D) It requires fewer computational resources

Answer: B) Pre-trained models converge faster

- What is the main cause of the Dead ReLU problem in deep learning?

A) High variance

B) High negative bias

C) Overfitting

D) Underfitting

Answer: B) High negative bias
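
A rough way to see the effect of a large negative bias is to count how often a ReLU unit outputs zero over a batch of inputs. The sketch below uses made-up weights and random inputs in $[-1, 1]$; `dead_fraction` is a hypothetical helper, not a library function.

```python
import random

def relu(z):
    return max(0.0, z)

def dead_fraction(weights, bias, batch):
    """Fraction of inputs in `batch` for which the ReLU unit outputs exactly 0."""
    zeros = 0
    for x in batch:
        z = sum(w * xi for w, xi in zip(weights, x)) + bias
        if relu(z) == 0.0:
            zeros += 1
    return zeros / len(batch)

random.seed(0)
batch = [[random.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(100)]
weights = [0.5, -0.3, 0.8]  # illustrative values only

# With zero bias the unit fires on part of the batch.
print(dead_fraction(weights, bias=0.0, batch=batch))
# |w . x| <= 1.6 here, so a bias of -10 keeps the pre-activation negative for every input.
print(dead_fraction(weights, bias=-10.0, batch=batch))
```

A unit that outputs zero for every input also receives zero gradient through ReLU, so its weights stop updating.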

- What is the main cause of the symmetry breaking problem in deep learning?

A) High variance

B) High bias

C) Overfitting

D) Equal initialization of weights

Answer: D) Equal initialization of weights
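
The effect of equal initialization can be illustrated on a tiny network. The sketch below is an assumption-laden toy (two inputs, two tanh hidden units, one linear output, squared-error loss, a single training example) written only to show that identically initialized hidden units receive identical updates.

```python
import math

def train_step(W, v, x, t, lr=0.1):
    """One gradient-descent step for y = sum_j v[j] * tanh(W[j] . x)."""
    h = [math.tanh(sum(wi * xi for wi, xi in zip(w, x))) for w in W]
    y = sum(vj * hj for vj, hj in zip(v, h))
    err = y - t  # derivative of 0.5 * (y - t)^2 with respect to y
    new_v = [vj - lr * err * hj for vj, hj in zip(v, h)]
    new_W = [
        [wi - lr * err * vj * (1.0 - hj * hj) * xi for wi, xi in zip(w, x)]
        for w, vj, hj in zip(W, v, h)
    ]
    return new_W, new_v

# Every weight starts at the same constant (0.5 is an arbitrary choice).
W = [[0.5, 0.5], [0.5, 0.5]]
v = [0.5, 0.5]
for _ in range(50):
    W, v = train_step(W, v, x=[1.0, -2.0], t=0.3)

# The two hidden units still carry identical weight vectors after training.
print(W[0], W[1])
```

Because both hidden units compute the same activation and receive the same gradient at every step, they never diverge; random initialization is what breaks this symmetry.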

Deep Learning Week 8 Nptel Assignment Answers (Apr-Jun 2023)

Q1. Which of the following functions can be used as an activation function in the output layer if we wish to predict the probabilities of n classes such that the sum of p over all n equals 1?

a. Softmax

b. ReLU

c. Sigmoid

d. Tanh

Answer: a. Softmax
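
As a quick illustration of why softmax fits this requirement, the sketch below computes it for three arbitrary example logits and checks that the outputs sum to 1.

```python
import math

def softmax(logits):
    """Exponentiate and normalize so the outputs are positive and sum to 1."""
    m = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])  # example logits, chosen arbitrarily
print(probs, sum(probs))
```

Sigmoid, tanh, and ReLU act on each output independently, so nothing forces their outputs to sum to 1.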

Q2. The input image has been converted into a matrix of size 256×256 and is convolved with a kernel/filter of size 5×5 with a stride of 1 and no padding. What will be the size of the convolved matrix?

a. 252×252

b. 3×3

c. 254×254

d. 256×256

Answer: a. 252×252
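
The 252×252 result follows from the standard output-size formula for a convolution, $\lfloor (N - F + 2P)/S \rfloor + 1$. A minimal sketch, with `conv_output_size` as a hypothetical helper name:

```python
def conv_output_size(n, f, stride=1, padding=0):
    """Spatial output size for an n x n input and an f x f kernel."""
    if stride < 1:
        raise ValueError("stride must be at least 1")
    return (n - f + 2 * padding) // stride + 1

# (256 - 5 + 0) / 1 + 1 = 252
print(conv_output_size(256, 5))
```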

These are NPTEL Deep Learning Week 8 Assignment Answers

Q3. What will be the range of output if we apply ReLU non-linearity and then Sigmoid Nonlinearity subsequently after a convolution layer?

a. [1,1]

b. [0,1]

c. [0.5,1]

d. [1,-0.5]

Answer: c. [0.5,1]
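
The reasoning is that ReLU maps every input to a value of at least 0, and the sigmoid of 0 is 0.5. The short sketch below applies both functions in sequence to a few sample inputs (the sample values are arbitrary).

```python
import math

def relu(x):
    return max(0.0, x)

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

# Negative inputs are clipped to 0 by ReLU, so the sigmoid never sees a value below 0.
outputs = [sigmoid(relu(x)) for x in (-100.0, -1.0, 0.0, 1.0, 100.0)]
print(min(outputs), max(outputs))
```

Mathematically the upper end is approached but not reached, so the exact range is $[0.5, 1)$.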

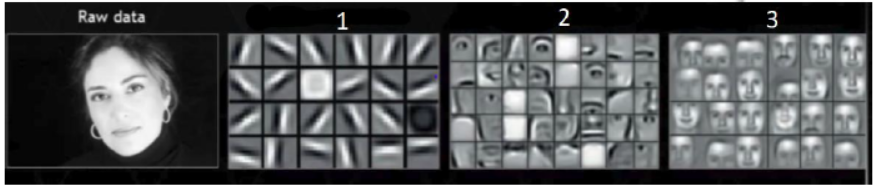

Q4. The figure shows an image of a face that is input to a convolutional neural network; the other three images show different levels of features extracted by the network. Which of the following options is correct?

a. Label 3: Low-level features, Label 2: High-level features, Label 1: Mid-level features

b. Label 1: Low-level features, Label 3: High-level features, Label 2: Mid-level features

c. Label 2: Low-level features, Label 1: High-level features, Label 3: Mid-level features

d. Label 3: Low-level features, Label 1: High-level features, Label 2: Mid-level features

Answer: b. Label 1: Low-level features, Label 3: High-level features, Label 2: Mid-level features

Q5. Suppose you have 8 convolutional kernels of size 5 x 5 with no padding and stride 1 in the first layer of a convolutional neural network. You pass an input of dimension 228 x 228 x 3 through this layer. What are the dimensions of the data which the next layer will receive?

a. 224x224x3

b. 224x224x8

c. 226x226x8

d. 225x225x3

Answer: b. 224x224x8
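
Each kernel spans all 3 input channels and produces one feature map, so the output depth equals the number of kernels rather than the input depth. A minimal sketch of that bookkeeping, using a hypothetical helper `conv_layer_output_shape`:

```python
def conv_layer_output_shape(height, width, kernel, num_kernels, stride=1, padding=0):
    """Output shape (H, W, C) of a conv layer; C is the number of kernels."""
    out_h = (height - kernel + 2 * padding) // stride + 1
    out_w = (width - kernel + 2 * padding) // stride + 1
    return (out_h, out_w, num_kernels)

# 228 - 5 + 1 = 224 in each spatial dimension, with 8 output channels
print(conv_layer_output_shape(228, 228, kernel=5, num_kernels=8))
```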

Q6. What is the mathematical form of the Leaky ReLU layer?

a. f(x)=max(0,x)

b. f(x)=min(0,x)

c. f(x)=min(0, ax), where a is a small constant

d. f(x)=1(x<0)(ax)+1(x>=0)(x), where a is a small constant

Answer: d. f(x)=1(x<0)(ax)+1(x>=0)(x), where a is a small constant
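
The indicator-function form in option d translates directly into a two-branch function. In the sketch below, the slope `a = 0.01` is a commonly used default and is an assumption, not a value given in the question.

```python
def leaky_relu(x, a=0.01):
    """Leaky ReLU: x for x >= 0, a * x for x < 0 (a is a small constant)."""
    return x if x >= 0 else a * x

# Negative inputs are scaled by a instead of being zeroed out.
for x in (-5.0, -1.0, 0.0, 3.0):
    print(x, leaky_relu(x))
```

The small non-zero slope for negative inputs keeps a gradient flowing, which is the usual motivation for Leaky ReLU over plain ReLU.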

Q7. The input image has been converted into a matrix of size 224 x 224 and convolved with a kernel/filter of size FxF with a stride of s and padding P to produce a feature map of dimension 222×222. Which among the following is true?

a. F=3×3,s=1,P=1

b. F=3×3,s=0, P=1

c. F=3×3,s=1,P=0

d. F=2×2,s=0, P=0

Answer: c. F=3×3,s=1,P=0
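
Option c can be verified with the standard output-size relation for a convolution (input size $N$, kernel size $F$, padding $P$, stride $s$):

$$\text{output} = \frac{N - F + 2P}{s} + 1 = \frac{224 - 3 + 2 \cdot 0}{1} + 1 = 222$$

Option a ($P = 1$) would give 224 instead, and a stride of 0 is not a valid setting.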

Q8. Statement 1: For a transfer learning task, lower layers are more generally transferred to another task

Statement 2: For a transfer learning task, last few layers are more generally transferred to another task

Which of the following options is correct?

a. Statement 1 is correct and Statement 2 is incorrect

b. Statement 1 is incorrect and Statement 2 is correct

c. Both Statement 1 and Statement 2 are correct

d. Both Statement 1 and Statement 2 are incorrect

Answer: a. Statement 1 is correct and Statement 2 is incorrect

Q9. Statement 1: Adding more hidden layers will solve the vanishing gradient problem for a 2-layer neural network

Statement 2: Making the network deeper will increase the chance of vanishing gradients.

a. Statement 1 is correct

b. Statement 2 is correct

c. Neither Statement 1 nor Statement 2 is correct

d. Vanishing gradient problem is independent of number of hidden layers of the neural network.

Answer: b. Statement 2 is correct

Q10. How many convolution layers are there in a LeNet-5 architecture?

a. 2

b. 3

c. 4

d. 5

Answer: a. 2

More Nptel Courses: https://progiez.com/nptel