Introduction to Machine Learning | Week 10

Session: JAN-APR 2024

Course name: Introduction to Machine Learning

Course Link: Click Here

For answers or latest updates join our telegram channel: Click here to join

These are Introduction to Machine Learning Week 10 Assignment 10 Answers

Q1. The K-means algorithm is not a particularly sophisticated approach to image segmentation tasks. Choose the best explanation below that supports this claim:

a) It takes no account of the spatial proximity of different pixels.

b) The curse of dimensionality does not affect the performance of K-means algorithm, as it effectively handles high-dimensional data with minimal loss of accuracy.

c) The algorithm requires the number of clusters (K) to be specified beforehand.

d) Initialization does not affect K-means.

Answer: a), c)

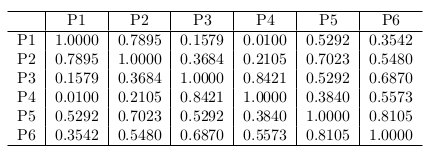

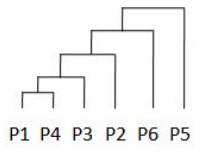

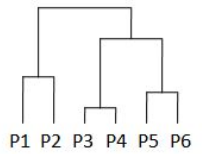

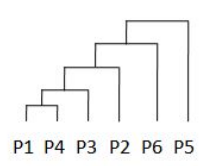

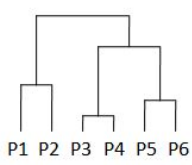

Q2. The pairwise distances between 6 points are given below. Which of the options shows the hierarchy of clusters created by the single link clustering algorithm?

a)

b)

c)

d)

Answer: d)

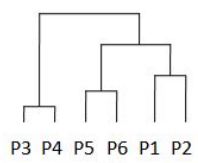

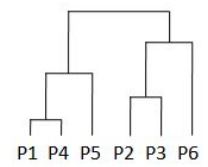

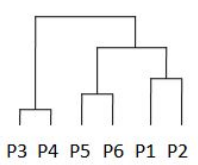

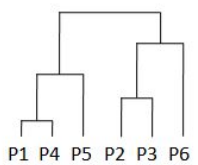

Q3. For the pairwise distance matrix given in the previous question, which of the following shows the hierarchy of clusters created by the complete link clustering algorithm?

a)

b)

c)

d)

Answer: d)
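The distance matrix for Q2 and Q3 is only available as an image, so the sketch below uses a hypothetical 4-point matrix (an assumption, not the assignment's data) to illustrate how single link and complete link can build different hierarchies from the same distances. The function name `agglomerate` is also just illustrative.

```python
def agglomerate(dist, linkage):
    """Naive agglomerative clustering on a full distance matrix.

    linkage=min gives single link, linkage=max gives complete link.
    Returns the list of (merged_cluster, merge_distance) in merge order.
    """
    clusters = [(i,) for i in range(len(dist))]
    merges = []
    while len(clusters) > 1:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # Cluster distance = min (or max) over all cross-cluster pairs.
                d = linkage(dist[i][j] for i in clusters[a] for j in clusters[b])
                if best is None or d < best[0]:
                    best = (d, a, b)
        d, a, b = best
        merged = tuple(sorted(clusters[a] + clusters[b]))
        merges.append((merged, d))
        clusters = [c for k, c in enumerate(clusters) if k not in (a, b)] + [merged]
    return merges


# Hypothetical distances (points at 0, 2, 5 and 9 on a line).
dist = [
    [0, 2, 5, 9],
    [2, 0, 3, 7],
    [5, 3, 0, 4],
    [9, 7, 4, 0],
]

single = agglomerate(dist, min)
complete = agglomerate(dist, max)
print("single  :", single)
print("complete:", complete)
```

In this toy case single link chains point 2 onto {0, 1}, while complete link pairs it with point 3 first. The same procedure applied to the assignment's matrix would give the dendrograms asked for in Q2 and Q3.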

Q4. In BIRCH, using the number of points N, the sum of points SUM and the sum of squared points SS, we can determine the centroid and radius of the combination of any two clusters A and B. How do you determine the radius of the combined cluster (in terms of N, SUM and SS of the two clusters A and B)?

Radius of a cluster is given by:

$\text{Radius} = \sqrt{\frac{SS}{N} - \left(\frac{SUM}{N}\right)^2}$

Note: We use the following definition of radius from the BIRCH paper: “Radius is the average distance from the member points to the centroid.”

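a) $\text{Radius} = \sqrt{\frac{SS_A}{N_A} - \left(\frac{SUM_A}{N_A}\right)^2 + \frac{SS_B}{N_B} - \left(\frac{SUM_B}{N_B}\right)^2}$

b) $\text{Radius} = \sqrt{\frac{SS_A}{N_A} - \left(\frac{SUM_A}{N_A}\right)^2} + \sqrt{\frac{SS_B}{N_B} - \left(\frac{SUM_B}{N_B}\right)^2}$

c) $\text{Radius} = \sqrt{\frac{SS_A + SS_B}{N_A + N_B} - \left(\frac{SUM_A + SUM_B}{N_A + N_B}\right)^2}$

d) $\text{Radius} = \sqrt{\frac{SS_A}{N_A} + \frac{SS_B}{N_B} - \left(\frac{SUM_A + SUM_B}{N_A + N_B}\right)^2}$

Answer: c) $\text{Radius} = \sqrt{\frac{SS_A + SS_B}{N_A + N_B} - \left(\frac{SUM_A + SUM_B}{N_A + N_B}\right)^2}$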
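A quick way to check the answer is to note that N, SUM and SS simply add when two clusters are merged. The sketch below uses 1-D points and made-up data (both are assumptions for illustration) and applies the radius formula quoted in the question to the merged summary.

```python
import math


def cf(points):
    """Clustering feature (N, SUM, SS) of a list of 1-D points."""
    return len(points), sum(points), sum(p * p for p in points)


def merge(cf_a, cf_b):
    """Merging two clusters adds their (N, SUM, SS) component-wise."""
    return tuple(x + y for x, y in zip(cf_a, cf_b))


def centroid(cf_entry):
    n, s, _ = cf_entry
    return s / n


def radius(cf_entry):
    """Radius formula quoted in the question: sqrt(SS/N - (SUM/N)^2)."""
    n, s, ss = cf_entry
    return math.sqrt(ss / n - (s / n) ** 2)


# Made-up example clusters.
a = [1.0, 2.0, 3.0]
b = [10.0, 12.0]

merged = merge(cf(a), cf(b))   # summary built from the two summaries
direct = cf(a + b)             # summary built from all raw points

print(merged, direct)
print(centroid(merged), radius(merged))
```

The merged summary matches the one computed from the raw points, which is why option c) only needs the sums SS_A + SS_B, SUM_A + SUM_B and N_A + N_B.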

Q5. Statement 1: CURE is robust to outliers.

Statement 2: Because of multiplicative shrinkage, the effect of outliers is dampened.

Statement 1 is true. Statement 2 is true. Statement 2 is the correct reason for Statement 1.

Statement 1 is true. Statement 2 is true. Statement 2 is not the correct reason for Statement 1.

Statement 1 is true. Statement 2 is false.

Both statements are false.

Answer: Statement 1 is true. Statement 2 is true. Statement 2 is the correct reason for Statement 1.

Q6. Which of the following statements about the Rand Index is true?

It is insensitive to the permutations of cluster labels

It is biased towards larger clusters

It cannot handle overlapping clusters

It is unaffected by outliers in the data

Answer: It is insensitive to the permutations of cluster labels

Q7. For rand-index: $RI = \frac{a+b}{\binom{n}{2}}$. ‘a’ in rand-index can be viewed as true positives (pairs of points belonging to the same cluster) and ‘b’ as true negatives (pairs of points belonging to different clusters). How are rand-index and accuracy related?

rand-index = accuracy

rand-index = 1.01×accuracy

rand-index = accuracy/2

None of the above

Answer: None of the above
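To see why the rand-index ignores cluster names and why it is not a fixed multiple of accuracy, here is a minimal sketch that counts the pairs a and b directly. The toy labels are made up for illustration, and the predicted labels are assumed to be already aligned with the true ones when accuracy is computed.

```python
from itertools import combinations


def rand_index(labels_true, labels_pred):
    """RI = (a + b) / C(n, 2), counted over all pairs of points."""
    pairs = list(combinations(range(len(labels_true)), 2))
    a = b = 0
    for i, j in pairs:
        same_true = labels_true[i] == labels_true[j]
        same_pred = labels_pred[i] == labels_pred[j]
        if same_true and same_pred:
            a += 1          # pair kept together in both labelings
        elif not same_true and not same_pred:
            b += 1          # pair kept apart in both labelings
    return (a + b) / len(pairs)


truth = [0, 0, 0, 1, 1, 1]
pred = [0, 0, 1, 1, 1, 1]
swapped = [1 - p for p in pred]   # same partition, cluster names exchanged

ri = rand_index(truth, pred)
ri_swapped = rand_index(truth, swapped)
accuracy = sum(t == p for t, p in zip(truth, pred)) / len(truth)

print(ri, ri_swapped, accuracy)
```

Here a = 4 and b = 6 out of 15 pairs, so the rand-index is 10/15 while the point-level accuracy is 5/6. Renaming the clusters leaves the rand-index unchanged.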

Q8. Run BIRCH on the input features of iris dataset using Birch(n_clusters=5, threshold=2). What is the rand-index obtained?

0.68

0.71

0.88

0.98

Answer: 0.88
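One possible way to reproduce this with scikit-learn is sketched below. It assumes the iris data comes from `sklearn.datasets.load_iris` and that the rand-index is computed with `sklearn.metrics.rand_score`; the assignment does not state either, and the exact value may depend on the library version.

```python
from sklearn.cluster import Birch
from sklearn.datasets import load_iris
from sklearn.metrics import rand_score

# Input features and ground-truth species labels.
X, y = load_iris(return_X_y=True)

# Parameters taken from the question. scikit-learn may warn if BIRCH finds
# fewer subclusters than n_clusters at this threshold.
labels = Birch(n_clusters=5, threshold=2).fit_predict(X)

ri = rand_score(y, labels)
print(f"Rand index: {ri:.2f}")
```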

Q9. Run PCA on the Iris dataset input features with n_components = 2. Now run DBSCAN using DBSCAN(eps=0.5, min_samples=5) on both the original features and the PCA features. What are their respective numbers of outliers/noisy points detected by DBSCAN?

As an extra, you can plot the PCA features on a 2D plot using matplotlib.pyplot.scatter with parameter c = y_pred (where y_pred is the cluster prediction) to visualise the clusters and outliers.

10, 10

17, 7

21, 11

5, 10

Answer: 21, 11
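A sketch of how the two counts could be obtained with scikit-learn follows. It assumes the data is loaded with `sklearn.datasets.load_iris` and relies on DBSCAN marking noise points with the label -1. The helper name `count_noise` is illustrative, and results may vary slightly across library versions.

```python
from sklearn.cluster import DBSCAN
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

X, _ = load_iris(return_X_y=True)

# Project the four input features onto the first two principal components.
X_pca = PCA(n_components=2).fit_transform(X)


def count_noise(features):
    """Number of points DBSCAN labels as noise (label -1)."""
    labels = DBSCAN(eps=0.5, min_samples=5).fit_predict(features)
    return int((labels == -1).sum())


noise_original = count_noise(X)
noise_pca = count_noise(X_pca)
print(noise_original, noise_pca)
```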

More Nptel Courses: https://progiez.com/nptel-assignment-answers

Session: JULY-DEC 2023

Course Name: Introduction to Machine Learning

Course Link: Click Here

Q1. The pairwise distances between 6 points are given below. Which of the options shows the hierarchy of clusters created by the single link clustering algorithm?

Answer: (b)

Q2. For the pairwise distance matrix given in the previous question, which of the following shows the hierarchy of clusters created by the complete link clustering algorithm?

Answer: (b)

Q3. In BIRCH, using the number of points N, the sum of points SUM and the sum of squared points SS, we can determine the centroid and radius of the combination of any two clusters A and B. How do you determine the radius of the combined cluster (in terms of N, SUM and SS of the two clusters A and B)?

Radius of a cluster is given by:

$\text{Radius} = \sqrt{\frac{SS}{N} - \left(\frac{SUM}{N}\right)^2}$

Note: We use the following definition of radius from the BIRCH paper:

“Radius is the average distance from the member points to the centroid.”

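$\text{Radius} = \sqrt{\frac{SS_A}{N_A} - \left(\frac{SUM_A}{N_A}\right)^2 + \frac{SS_B}{N_B} - \left(\frac{SUM_B}{N_B}\right)^2}$

$\text{Radius} = \sqrt{\frac{SS_A}{N_A} - \left(\frac{SUM_A}{N_A}\right)^2} + \sqrt{\frac{SS_B}{N_B} - \left(\frac{SUM_B}{N_B}\right)^2}$

$\text{Radius} = \sqrt{\frac{SS_A + SS_B}{N_A + N_B} - \left(\frac{SUM_A + SUM_B}{N_A + N_B}\right)^2}$

$\text{Radius} = \sqrt{\frac{SS_A}{N_A} + \frac{SS_B}{N_B} - \left(\frac{SUM_A + SUM_B}{N_A + N_B}\right)^2}$

Answer: C. $\text{Radius} = \sqrt{\frac{SS_A + SS_B}{N_A + N_B} - \left(\frac{SUM_A + SUM_B}{N_A + N_B}\right)^2}$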

Q4. Run K-means on the input features of the MNIST dataset using the following initialization:

KMeans(n_clusters=10, random_state=seed)

Usually, for clustering tasks, we are not given labels, but since we do have labels for our dataset, we can use accuracy to determine how good our clusters are.

Label the prediction class for all the points in a cluster as the majority true label.

E.g. {a,a,b} would be labeled as {a,a,a}

What is the accuracy of the resulting labels?

0.790

0.893

0.702

0.933

Answer: 0.790
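The majority-label step described in this question can be sketched in plain Python as below. The cluster ids and true labels are a made-up toy example, not MNIST output, and the function names are illustrative.

```python
from collections import Counter, defaultdict


def majority_relabel(cluster_ids, true_labels):
    """Replace each point's cluster id by the most common true label in that cluster."""
    members = defaultdict(list)
    for cid, label in zip(cluster_ids, true_labels):
        members[cid].append(label)
    majority = {
        cid: Counter(labels).most_common(1)[0][0]
        for cid, labels in members.items()
    }
    return [majority[cid] for cid in cluster_ids]


def accuracy(true_labels, predicted):
    """Fraction of points whose predicted label equals the true label."""
    return sum(t == p for t, p in zip(true_labels, predicted)) / len(true_labels)


# Toy example: cluster 0 holds {a, a, b}, cluster 1 holds {b, b}.
cluster_ids = [0, 0, 0, 1, 1]
true_labels = ["a", "a", "b", "b", "b"]

relabelled = majority_relabel(cluster_ids, true_labels)
print(relabelled, accuracy(true_labels, relabelled))
```

With real data, `cluster_ids` would be the output of the K-means fit and `true_labels` the dataset's targets.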

Q5. For the same clusters obtained in the previous question, calculate the rand-index. The formula for rand-index:

$R = \frac{a+b}{\binom{n}{2}}$

where,

a = the number of pairs of elements that are in the same cluster in both clusterings.

b = the number of pairs of elements that are in different clusters in both clusterings.

Note: The two clusterings are given by: (1) Ground truth labels, (2) Prediction labels using clustering as directed in Q4.

0.879

0.893

0.919

0.933

Answer: 0.933

Q6. a in rand-index can be viewed as true positives (pairs of points belonging to the same cluster) and b as true negatives (pairs of points belonging to different clusters). How, then, are rand-index and accuracy from the previous two questions related?

rand-index = accuracy

rand-index = 1.18×accuracy

rand-index = accuracy/2

None of the above

Answer: None of the above

Q7. Run BIRCH on the input features of the MNIST dataset using Birch(n_clusters=10, threshold=1).

What is the rand-index obtained?

0.91

0.96

0.88

0.98

Answer: 0.96

Q8. Run PCA on the MNIST dataset input features with n_components = 2. Now run DBSCAN using DBSCAN(eps=0.5, min_samples=5) on both the original features and the PCA features. What are their respective numbers of outliers/noisy points detected by DBSCAN?

As an extra, you can plot the PCA features on a 2D plot using matplotlib.pyplot.scatter with parameter c=y_pred (where y_pred is the cluster prediction) to visualise the clusters and outliers.

1600, 1522

1500, 1482

1000, 1000

1797, 1742

Answer: 1797, 1742

Session: JAN-APR 2023

Course Name: Introduction to Machine Learning

Course Link: Click Here

Q1. Consider the following one-dimensional data set: 12, 22, 2, 3, 33, 27, 5, 16, 6, 31, 20, 37, 8 and 18. Given k=3 and initial cluster centres 5, 6 and 31, what are the final cluster centres obtained on applying the k-means algorithm?

a. 5, 18, 30

b. 5, 18, 32

c. 6, 19, 32

d. 4.8, 17.6, 32

e. None of the above

Answer: d. 4.8, 17.6, 32

Q2. For the previous question, in how many iterations will the k-means algorithm converge?

a. 2

b. 3

c. 4

d. 6

e. 7

Answer: c. 4
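Both answers can be checked with a few lines of plain Python. This is a minimal sketch of standard k-means on the data from Q1. It assumes an iteration is one assign-and-update pass and that the final pass, which leaves the centres unchanged, is counted too.

```python
def kmeans_1d(data, centres):
    """Plain k-means on 1-D data; returns (final centres, passes needed).

    Assumes no cluster ever becomes empty (true for this data set).
    """
    iterations = 0
    while True:
        iterations += 1
        # Assignment step: each point goes to its nearest centre.
        clusters = [[] for _ in centres]
        for x in data:
            nearest = min(range(len(centres)), key=lambda k: abs(x - centres[k]))
            clusters[nearest].append(x)
        # Update step: each centre becomes the mean of its points.
        new_centres = [sum(c) / len(c) for c in clusters]
        if new_centres == centres:
            return centres, iterations
        centres = new_centres


data = [12, 22, 2, 3, 33, 27, 5, 16, 6, 31, 20, 37, 8, 18]
final_centres, n_iter = kmeans_1d(data, [5, 6, 31])
print(final_centres, n_iter)
```

The centres move from (5, 6, 31) to roughly (3.33, 12, 28.33), then (4, 14.8, 30), then (4.8, 17.6, 32), and the fourth pass changes nothing.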

Q3. In the lecture on the BIRCH algorithm, it is stated that using the number of points N, sum of points SUM and sum of squared points SS, we can determine the centroid and radius of the combination of any two clusters A and B. How do you determine the centroid of the combined cluster? (In terms of N,SUM and SS of both the clusters)

a. $SUM_A + SUM_B$

b. $\frac{SUM_A}{N_A} + \frac{SUM_B}{N_B}$

c. $\frac{SUM_A + SUM_B}{N_A + N_B}$

d. $\frac{SS_A + SS_B}{N_A + N_B}$

Answer: c. $\frac{SUM_A + SUM_B}{N_A + N_B}$

Q4. What assumption does the CURE clustering algorithm make with regard to the shape of the clusters?

a. No assumption

b. Spherical

c. Elliptical

Answer: a. No assumption

Q5. What would be the effect of increasing MinPts in DBSCAN while retaining the same Eps parameter? (Note that more than one statement may be correct)

a. Increase in the sizes of individual clusters

b. Decrease in the sizes of individual clusters

c. Increase in the number of clusters

d. Decrease in the number of clusters

Answer: b, c
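The effect can be seen on a small example. The sketch below is a simplified 1-D DBSCAN written for illustration (not a library implementation), run on made-up points where two dense groups are joined by a single bridging point. As in scikit-learn, a point counts itself among its neighbours.

```python
def dbscan_1d(points, eps, min_pts):
    """Simplified DBSCAN on 1-D points; returns cluster labels, -1 for noise."""
    n = len(points)
    neighbors = [
        [j for j in range(n) if abs(points[i] - points[j]) <= eps]
        for i in range(n)
    ]
    labels = [None] * n            # None = not visited yet
    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        if len(neighbors[i]) < min_pts:
            labels[i] = -1         # provisional noise, may become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = list(neighbors[i])
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster    # noise reached from a core point: border
            if labels[j] is not None:
                continue
            labels[j] = cluster
            if len(neighbors[j]) >= min_pts:
                queue.extend(neighbors[j])   # only core points expand the cluster
    return labels


# Two dense groups joined by one bridging point at 2.5 (made-up data).
points = [0, 0.5, 1, 1.5, 2.5, 3.5, 4, 4.5, 5]

labels_min3 = dbscan_1d(points, eps=1.0, min_pts=3)
labels_min4 = dbscan_1d(points, eps=1.0, min_pts=4)
print(labels_min3)
print(labels_min4)
```

With MinPts = 3 the bridging point is a core point and everything forms one cluster of 9 points. With MinPts = 4 it is no longer core, so the data splits into two smaller clusters. On other data, raising MinPts far enough can also turn whole clusters into noise.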

For the next question, kindly download the dataset – DS1. The first two columns in the dataset correspond to the co-ordinates of each data point. The third column corresponds to the actual cluster label.

DS1: Click here

Q6. Visualize the dataset DS1. Which of the following algorithms will be able to recover the true clusters? (First check by visual inspection and then write code to see if the result matches what you expected.)

a. K-means clustering

b. Single link hierarchical clustering

c. Complete link hierarchical clustering

d. Average link hierarchical clustering

Answer: b. Single link hierarchical clustering

Q7. Consider the similarity matrix given below. Which of the following shows the hierarchy of clusters created by the single link clustering algorithm?

a.

b.

c.

d.

Answer: b

Q8. For the similarity matrix given in the previous question, which of the following shows the hierarchy of clusters created by the complete link clustering algorithm?

a.

b.

c.

d.

Answer: d

More Nptel courses: https://progiez.com/nptel